Kaggle challenges us to learn data analysis and machine learning from the data of the Titanic shipwreck, to try to predict survival, and to get familiar with ML basics.

This material is therefore intended to cover most of the data analysis and ML techniques available in Python, rather than to compete properly on Kaggle. That is why it follows the natural flow of an ML project and contains many texts and links about the techniques, to make checking and reference easy. It can also be extended over time.

In this way the material can be used for consultation and its methods applied to other similar classification cases, but to apply it in the competition, or even to a real case, it will be necessary to make some choices and changes.

Competition Description:

The sinking of the RMS Titanic is one of the most infamous shipwrecks in history. On April 15, 1912, during her maiden voyage, the Titanic sank after colliding with an iceberg, killing 1502 out of 2224 passengers and crew. This sensational tragedy shocked the international community and led to better safety regulations for ships.

One of the reasons that the shipwreck led to such loss of life was that there were not enough lifeboats for the passengers and crew. Although there was some element of luck involved in surviving the sinking, some groups of people were more likely to survive than others, such as women, children, and the upper-class.

In this challenge, Kaggle asks you to complete the analysis of what sorts of people were likely to survive. In particular, they ask you to apply the tools of machine learning to predict which passengers survived the tragedy.

Table of Contents

- 1 Preparing environment and uploading data

- 2 Exploratory Data Analysis (EDA) & Feature Engineering

- 3 Select Features

- 4 Additional Feature Engineering: Feature transformation

- 5 Modeling – Hyper Parametrization

- 5.1 Simplify Get Results

- 5.2 Logistic Regression

- 5.3 SGDClassifier

- 5.4 Linear Support Vector Classification

- 5.5 Gaussian Process Classifier (GPC)

- 5.6 Random Forest Classifier

- 5.7 AdaBoost classifier

- 5.8 K-Nearest Neighbors

- 5.9 Multi-layer Perceptron classifier

- 5.10 Gradient Boosting for Classification

- 5.11 XGBoost (eXtreme Gradient Boosting)

- 6 Finalize The Model: Stacking the Models

- 7 Conclusion

Preparing environment and uploading data

You can download this Python notebook and the data from my GitHub repository. The data can also be downloaded from Kaggle here.

Import Packages

import os

import warnings

warnings.simplefilter(action = 'ignore', category=FutureWarning)

warnings.filterwarnings('ignore')

def ignore_warn(*args, **kwargs):

    pass

warnings.warn = ignore_warn  # ignore annoying warnings (from sklearn and seaborn)

import numpy as np

import pandas as pd

import pylab

import seaborn as sns

sns.set(style="ticks", color_codes=True, font_scale=1.5)

from matplotlib import pyplot as plt

from matplotlib.ticker import FormatStrFormatter

from matplotlib.colors import ListedColormap

%matplotlib inline

import mpl_toolkits

from mpl_toolkits.mplot3d import Axes3D

import model_evaluation_utils as meu

from scipy.stats import skew, norm, probplot, boxcox

from patsy import dmatrices

import statsmodels.api as sm

from statsmodels.stats.outliers_influence import variance_inflation_factor

from sklearn.feature_selection import f_classif, chi2, SelectKBest, SelectFromModel

from boruta import BorutaPy

from rfpimp import *

from sklearn.decomposition import PCA, KernelPCA

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis as LDA

from sklearn.preprocessing import StandardScaler, PolynomialFeatures, MinMaxScaler

from sklearn.pipeline import Pipeline, make_pipeline

from sklearn.model_selection import GridSearchCV, cross_val_score, KFold, cross_val_predict, train_test_split

from sklearn.metrics import roc_auc_score, roc_curve, auc, accuracy_score

from sklearn.linear_model import LogisticRegression, SGDClassifier

from sklearn.svm import SVC, LinearSVC

from sklearn.gaussian_process import GaussianProcessClassifier

from sklearn.gaussian_process.kernels import RBF

from sklearn.ensemble import RandomForestClassifier, AdaBoostClassifier, ExtraTreesClassifier

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.neural_network import MLPClassifier

from sklearn.neighbors import KNeighborsClassifier

import xgboost as xgb

from xgboost import XGBClassifier

from xgboost import plot_importance

#from sklearn.base import BaseEstimator, TransformerMixin, clone, ClassifierMixin

from sklearn.ensemble import VotingClassifier

from itertools import combinations

Load Datasets

I start by loading the datasets with pandas and concatenating them.

train = pd.read_csv('train.csv')

test = pd.read_csv('test.csv')

Test_ID = test.PassengerId

test.insert(loc=1, column='Survived', value=-1)

data = pd.concat([train, test], ignore_index=True)
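Because the test rows carry the sentinel Survived = -1, the combined frame can be split back into its two parts at any time. The following is a minimal sketch with toy frames; the values and the names train_toy, test_toy, train_back and test_back are invented for illustration only.

```python
import pandas as pd

# Toy stand-ins for train.csv and test.csv (values are illustrative only)
train_toy = pd.DataFrame({'PassengerId': [1, 2, 3], 'Survived': [0, 1, 1], 'Age': [22.0, 38.0, 26.0]})
test_toy = pd.DataFrame({'PassengerId': [4, 5], 'Age': [34.5, 47.0]})

# Mark the test rows with the sentinel -1 so both sets share the same columns
test_toy.insert(loc=1, column='Survived', value=-1)
combined = pd.concat([train_toy, test_toy], ignore_index=True)

# Recover each subset from the sentinel
train_back = combined[combined.Survived >= 0]
test_back = combined[combined.Survived < 0].drop(columns='Survived')
```

This is the same filter (Survived>=0) used throughout the charts of the following sections.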

Exploratory Data Analysis (EDA) & Feature Engineering

Take a First Look at Our Data:

I created the function below to simplify the analysis of the general characteristics of the data. Inspired by the str function of R, it returns the type, count, number of distinct values, number of nulls, missing ratio, unique values, skewness and kurtosis of each field/feature.

If the study involves supervised learning, the function can also return the correlation of each feature with the target; for this we just need to pass the dependent (target) variable to the pred parameter.

Also, if its return value is stored in a variable, you can evaluate it in more detail, look at a specific field, or sort the fields from different perspectives.

def rstr(df, pred=None):

    obs = df.shape[0]

    types = df.dtypes

    counts = df.apply(lambda x: x.count())

    uniques = df.apply(lambda x: [x.unique()])

    nulls = df.apply(lambda x: x.isnull().sum())

    distincts = df.apply(lambda x: x.unique().shape[0])

    missing_ratio = (df.isnull().sum() / obs) * 100

    # Skewness, kurtosis and correlation are only defined for numeric columns

    numeric = df.select_dtypes(include='number')

    skewness = numeric.skew()

    kurtosis = numeric.kurt()

    print('Data shape:', df.shape)

    if pred is None:

        cols = ['types', 'counts', 'distincts', 'nulls', 'missing_ratio', 'uniques', 'skewness', 'kurtosis']

        summary = pd.concat([types, counts, distincts, nulls, missing_ratio, uniques, skewness, kurtosis], axis = 1)

    else:

        corr = numeric.corr()[pred]

        summary = pd.concat([types, counts, distincts, nulls, missing_ratio, uniques, skewness, kurtosis, corr], axis = 1, sort=False)

        corr_col = 'corr ' + pred

        cols = ['types', 'counts', 'distincts', 'nulls', 'missing_ratio', 'uniques', 'skewness', 'kurtosis', corr_col]

    # The result is named summary so that the built-in str is not shadowed

    summary.columns = cols

    print('___________________________\nData types:\n', summary.types.value_counts())

    print('___________________________')

    return summary

details = rstr(data.loc[: ,'Survived' : 'Embarked'], 'Survived')

details.sort_values(by='corr Survived', ascending=False)

Data Dictionary

- Survived: 0 = No, 1 = Yes. I use -1 to separate the test data from the training data.

- Fare: The passenger fare

- Parch: # of parents / children aboard the Titanic

- SibSp: # of siblings / spouses aboard the Titanic

- Age: Age in years

- Pclass: Ticket class 1 = 1st, 2 = 2nd, 3 = 3rd

- Name: Name of the passenger

- Sex: Sex of the passenger male and female

- Ticket: Ticket number

- Cabin: Cabin number

- Embarked: Port of Embarkation C = Cherbourg, Q = Queenstown, S = Southampton

The points of attention we have here are:

- Fare: Kaggle states that this is the passenger fare, but inspecting the data grouped by Ticket shows that it is the total fare paid for a ticket, and that some tickets cover a group of passengers.

- Parch: The dataset defines family relations in this way…

- Parent = mother, father

- Child = daughter, son, stepdaughter, stepson

- Some children traveled only with a nanny, therefore parch=0 for them.

- SibSp: The dataset defines family relations in this way…

- Sibling = brother, sister, stepbrother, stepsister

- Spouse = husband, wife (mistresses and fiancés were ignored)

- Age: About 20% of the values are null, so we have to find a more effective way of filling them than a single value such as the median.

- Name: A categorical feature with a high number of distinct values, as expected. The first reaction is to drop this column, but we can use it to practice different feature engineering techniques and see whether it yields valuable information. Besides that, did you notice that there are two pairs of passengers with the same name?

- Ticket: Another categorical feature, but in this case only 71% of the values are distinct and there are no nulls. So it is possible that some passengers travelled in groups and used the same ticket. Beyond that, we can check whether other interesting information can be extracted from it.

- Cabin: Has a high number of distinct values (187) and of nulls (77.5%).

This is a categorical feature that I use to practice different techniques for extracting valuable information and imputing nulls, but given the high rate of nulls the recommended approach would be to simplify the filling or even to exclude this attribute.
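The Age bullet above asks for something better than one global value. One common option is to fill each missing age with the median of a group of similar passengers. The sketch below is only an illustration on invented data; grouping by Pclass and Sex is an assumption made here, not necessarily the approach this material takes later.

```python
import numpy as np
import pandas as pd

# Toy data (invented values) with two missing ages
toy = pd.DataFrame({
    'Pclass': [1, 1, 1, 3, 3, 3],
    'Sex': ['female', 'female', 'female', 'male', 'male', 'male'],
    'Age': [38.0, np.nan, 42.0, 20.0, 24.0, np.nan],
})

# Median age of each (Pclass, Sex) group, broadcast back to every row
group_median = toy.groupby(['Pclass', 'Sex'])['Age'].transform('median')

# Fill only the missing ages; fall back to the global median if a group has no known age
toy['Age_filled'] = toy['Age'].fillna(group_median).fillna(toy['Age'].median())
```

Known ages are left untouched, and each missing age receives the median of its own group instead of the same constant for everyone.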

First Look at Some Stats of the Numeric Data

For the main statistics of our numeric data we use the describe function (similar to summary in R).

print('Data is not balanced! Only {:2.2%} survived'.format(train.Survived.mean()))

display(data.loc[: ,'Pclass' : 'Embarked'].describe().transpose())

print('Survived: [1] Survived; [0] Died; [-1] Test Data set:\n',data.Survived.value_counts())

def charts(feature, df):

    print('\n ____________________________ Plots of', feature, 'per Survived and Dead: ____________________________')

    # Pie of all Data

    fig = plt.figure(figsize=(20,5))

    f1 = fig.add_subplot(131)

    cnt = df[feature].value_counts()

    g = plt.pie(cnt, labels=cnt.index, autopct='%1.1f%%', shadow=True, startangle=90)

    # Count Plot By Survived and Dead

    f = fig.add_subplot(132)

    g = sns.countplot(x=feature, hue='Survived', hue_order=[1,0], data=df, ax=f)

    # Percent stacked Plot

    survived = df[df['Survived']==1][feature].value_counts()

    dead = df[df['Survived']==0][feature].value_counts()

    df2 = pd.DataFrame([survived,dead])

    df2.index = ['Survived','Dead']

    df2 = df2.T

    df2 = df2.fillna(0)

    df2['Total'] = df2.Survived + df2.Dead

    df2.Survived = df2.Survived/df2.Total

    df2.Dead = df2.Dead/df2.Total

    df2.drop(['Total'], axis=1, inplace=True)

    f = fig.add_subplot(133)

    df2.plot(kind='bar', stacked=True, ax=f)

    del df2, g, f, cnt, dead, fig

Ticket

Since Ticket is transactional, categorical data, the first instinct is to drop this feature, but it hides some valuable information. At first glance, save for a few cases, we could state that:

- families and groups of people that traveled together bought the same ticket.

- people with alphanumeric tickets received some special treatment (crew family, employees, VIP, free tickets, etc.)

So, we start by creating a new feature that quantifies the number of passengers per ticket, and attach this quantity to each passenger with the same ticket.

same_ticket = data.Ticket.value_counts()

data['qtd_same_ticket'] = data.Ticket.apply(lambda x: same_ticket[x])

del same_ticket
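The same per-ticket count can also be obtained without an intermediate lookup, by letting pandas broadcast the group size back to each row. This is just an alternative sketch on invented ticket values, not a replacement for the code above.

```python
import pandas as pd

# Invented ticket codes: three passengers share A1, the others travel alone
toy = pd.DataFrame({'Ticket': ['A1', 'A1', 'B2', 'C3', 'A1']})

# Number of rows in each ticket group, aligned with the original rows
toy['qtd_same_ticket'] = toy.groupby('Ticket')['Ticket'].transform('count')
```

Note that 'count' ignores nulls, which is harmless here because Ticket has no missing values.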

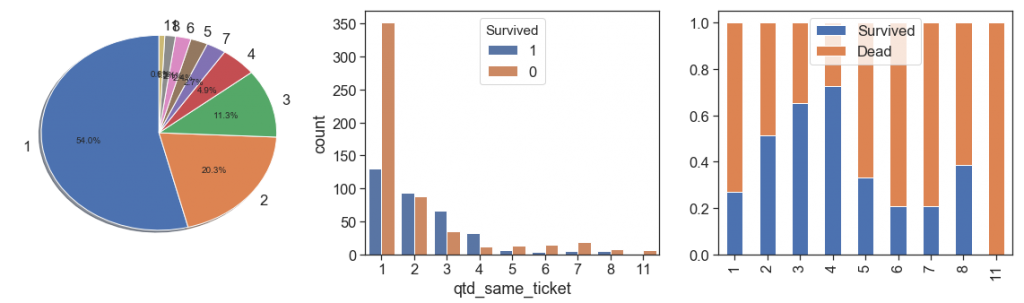

charts('qtd_same_ticket', data[data.Survived>=0])

As we can see above:

- the majority (54%) bought a ticket for a single passenger, and have a lower survival rate than passengers on tickets for 2, 3, 4, 5 and 8 people.

- the survival rate grows between 1 and 4 and drops sharply at 5. From the bar chart we can see that from 5 onward the number of samples is too low (84 out of 891, 9.4%, a quarter of which are 5), and the data is skewed with a long right tail. We can reduce this tail by binning everything above 4 into the same ordinal value, which helps prevent overfitting, but we lose some other interesting cases (see the next bullet). Alternatively, we can apply a Box-Cox transformation to this measure.

- the case of 11 people on the same ticket is probably one huge family, all of whose members in the training data died. Let’s check this below.

data[(data.qtd_same_ticket==11)]

This confirms our hypothesis, and we also notice that Fare is not the price per passenger but the price of each ticket, its total amount. Since our data is per passenger, this introduces some distortion: a single passenger who bought a ticket alone for 69.55 pounds is different from 11 passengers who bought a special group ticket at 6.32 pounds per passenger. This suggests creating a new feature that represents the actual fare per passenger.

Back to the number of people on the same ticket: if we keep this feature and the model captures this pattern, it will probably predict that the respective test samples also died! However, even if true, this can be a sign of overfitting, because we only have 1.2% of these cases in the training samples.

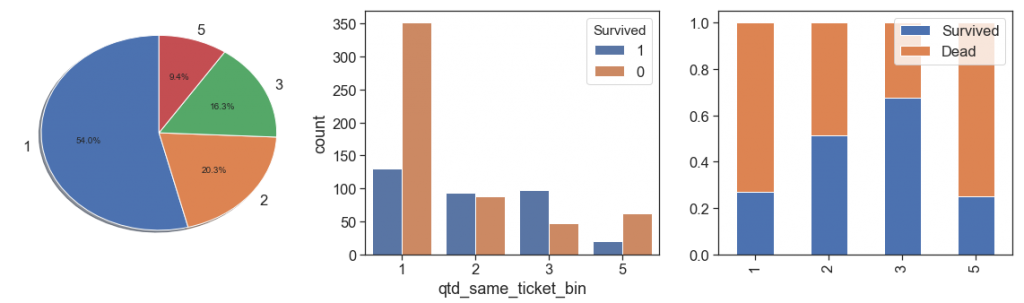

In order to increase representativeness while losing as little information as possible, since we have only 44 (4.9%) training samples on tickets for 4 people and 101 (11.3%) on tickets for 3, we bin the quantities 3 and 4 together as 3 (16.3%) and everything from 5 up as 5 (84 samples). Let’s see the results below.

data['qtd_same_ticket_bin'] = data.qtd_same_ticket.apply(lambda x: 3 if (x>2 and x<5) else (5 if x>4 else x))

charts('qtd_same_ticket_bin', data[data.Survived>=0])
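The binning rule above can also be written with pd.cut, which makes the bin edges explicit. A minimal sketch on invented counts; the labels 1, 2, 3 and 5 simply mirror the values produced by the lambda above.

```python
import numpy as np
import pandas as pd

counts = pd.Series([1, 2, 3, 4, 5, 7, 11])

# Right-inclusive bins: (0, 1], (1, 2], (2, 4] and (4, inf)
binned = pd.cut(counts, bins=[0, 1, 2, 4, np.inf], labels=[1, 2, 3, 5]).astype(int)

# Same rule as the lambda used for qtd_same_ticket_bin
by_lambda = counts.apply(lambda x: 3 if (x > 2 and x < 5) else (5 if x > 4 else x))
```

Both forms give the same result; pd.cut is easier to adjust if the edges need to change later.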

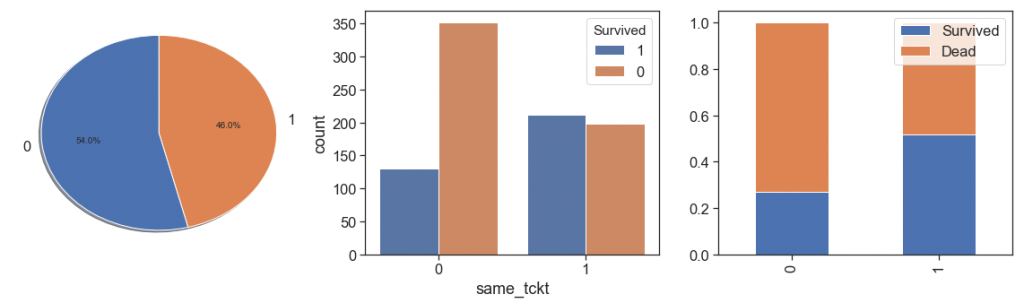

Another option is to create a binary feature that indicates whether the passenger shares a ticket with someone else or not.

print('Percent. survived from unique ticket: {:3.2%}'.\

format(data.Survived[(data.qtd_same_ticket==1) & (data.Survived>=0)].sum()/

data.Survived[(data.qtd_same_ticket==1) & (data.Survived>=0)].count()))

print('Percent. survived from same tickets: {:3.2%}'.\

format(data.Survived[(data.qtd_same_ticket>1) & (data.Survived>=0)].sum()/

data.Survived[(data.qtd_same_ticket>1) & (data.Survived>=0)].count()))

data['same_tckt'] = data.qtd_same_ticket.apply(lambda x: 1 if (x> 1) else 0)

charts('same_tckt', data[data.Survived>=0])

In this case we lose the information that the chances of survival increase from 1 to 4 and fall from 5 on. In addition, cases 1 and 0 of the two measures are exactly the same. So we will not use this option, and will move on to work on Fare.

Finally, there is one more piece of information to extract directly from Ticket: the possible special treatment!

# [A-Za-z] matches letters only; the range [A-z] would also match [, \, ], ^, _ and `

data.Ticket.str.findall('[A-Za-z]').apply(lambda x: ''.join(x)).value_counts().head(7)

data['distinction_in_tikect'] = data.Ticket.str.findall('[A-Za-z]').apply(lambda x: ''.join(x))

data.distinction_in_tikect = data.distinction_in_tikect.apply(
    lambda y: 'Without' if y=='' else y if (y in ['PC', 'CA', 'A', 'SOTONOQ', 'STONO', 'WC', 'SCPARIS']) else 'Others')

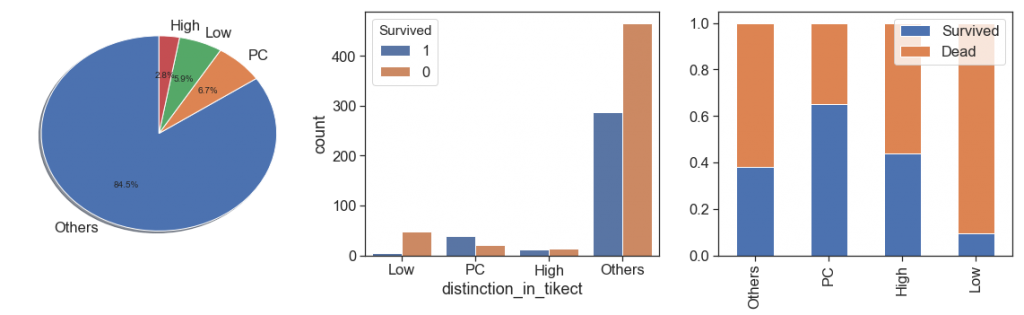

charts('distinction_in_tikect', data[(data.Survived>=0)])

By the results, passengers with the PC distinction in their tickets had the best survival rate. Without, Others and CA are very close and can be grouped into one category, and we can do the same with STONO and SCPARIS, and with A, SOTONOQ and WC.

data.distinction_in_tikect = data.distinction_in_tikect.apply(
    lambda y: 'Others' if (y in ['Without', 'Others', 'CA']) else
              'Low' if (y in ['A', 'SOTONOQ', 'WC']) else
              'High' if (y in ['STONO', 'SCPARIS']) else y)

charts('distinction_in_tikect', data[(data.Survived>=0)])
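To make the prefix extraction easier to follow, here is a small sketch that strips everything except letters from a few ticket strings in the style of the dataset. It uses str.replace instead of findall, which is an alternative shown for illustration only.

```python
import pandas as pd

tickets = pd.Series(['PC 17599', 'STON/O2. 3101282', '113803', 'C.A. 2315', 'W./C. 6608'])

# Remove everything that is not an ASCII letter; purely numeric tickets become ''
prefix = tickets.str.replace(r'[^A-Za-z]', '', regex=True)

# Label tickets that have no letters at all
prefix = prefix.replace('', 'Without')
```

Punctuation and digits disappear, so 'C.A. 2315' and a hypothetical 'CA 2315' would fall into the same 'CA' category.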

Fare¶

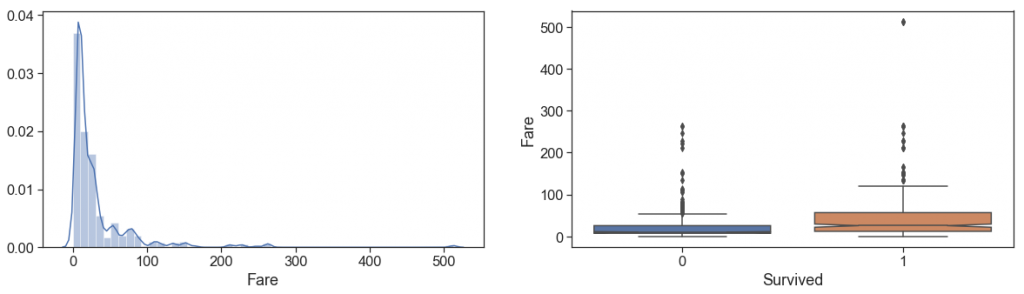

First, we treat the unique null fare case, then we take a look of the distribution of Fare (remember that is the total amount Fare of the Ticket).

# Fill null with median of most likely type passenger

data.loc[data.Fare.isnull(), 'Fare'] = data.Fare[(data.Pclass==3) & (data.qtd_same_ticket==1) & (data.Age>60)].median()

fig = plt.figure(figsize=(20,5))

f = fig.add_subplot(121)

g = sns.distplot(data[(data.Survived>=0)].Fare)

f = fig.add_subplot(122)

g = sns.boxplot(y='Fare', x='Survived', data=data[data.Survived>=0], notch = True)

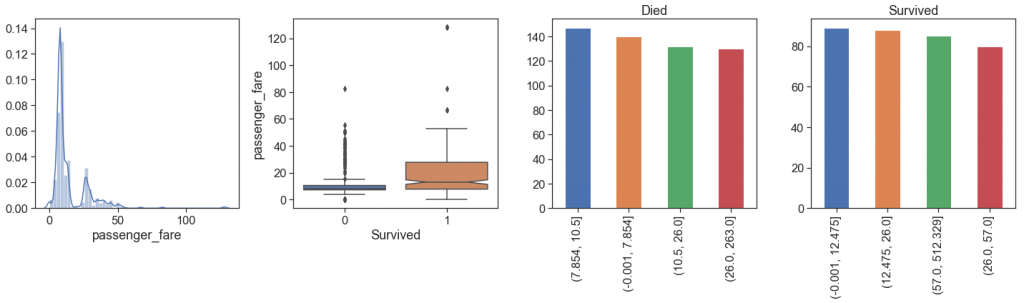

Let’s take a look at what the fare per passenger looks like and how much it differs from the total.

data['passenger_fare'] = data.Fare / data.qtd_same_ticket

fig = plt.figure(figsize=(20,6))

a = fig.add_subplot(141)

g = sns.distplot(data[(data.Survived>=0)].passenger_fare)

a = fig.add_subplot(142)

g = sns.boxplot(y='passenger_fare', x='Survived', data=data[data.Survived>=0], notch = True)

a = fig.add_subplot(143)

g = pd.qcut(data.Fare[(data.Survived==0)], q=[.0, .25, .50, .75, 1.00]).value_counts().plot(kind='bar', ax=a, title='Died')

a = fig.add_subplot(144)

g = pd.qcut(data.Fare[(data.Survived>0)], q=[.0, .25, .50, .75, 1.00]).value_counts().plot(kind='bar', ax=a, title='Survived')

plt.tight_layout(); plt.show()

From the comparison, we can see that:

- the distributions are not exactly the same, with two peaks slightly apart in the passenger fare.

- class and the amount paid per passenger make a difference!

Although the number of survivors is approximately the same across the quartiles, as expected, when we look at passenger fares it is more apparent that the mortality rate is higher for the lower fares, since the top of Q4 for those who died is at the same height as the median plus a confidence interval of the fare paid by survivors.

- the number of outliers is lower in the fare per passenger, especially among survivors.

We cannot rule out these outliers if there are cases of the same type in the test data set. In addition, these differences in values are probably due to first-class tickets with additional fees for certain exclusive services and cargo.

Below, you can see that the passengers with the largest outlier all survived in the training data set, and that there is one such case to predict (PassengerId 1235, the matriarch travelling with one son and two companions). Among all the outlier cases with a passenger fare above 50, we see that all are first class and that, unlike the largest outlier, 27% actually died, and we have 18 cases to predict.

print('Passengers with the highest passenger fare:')

display(data[data.passenger_fare>120])

print('\nSurvival of passengers with passenger fare above 50:\n',

pd.pivot_table(data.loc[data.passenger_fare>50, ['Pclass', 'Survived']], aggfunc=np.count_nonzero,

columns=['Survived'] , index=['Pclass']))

Note that if we leave it this way and the model succeeds in capturing the pattern of the largest outlier, we are again looking at a model that is at risk of overfitting (0.03% of cases).
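The outliers discussed above are the points that a box plot draws beyond its whiskers. Assuming the usual rule of 1.5 times the interquartile range (the default in seaborn's boxplot), they can be counted with a small helper. The function name iqr_outliers and the fares below are invented for this sketch.

```python
import pandas as pd

def iqr_outliers(values, k=1.5):
    """Return a boolean mask of the points outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, q3 = values.quantile(0.25), values.quantile(0.75)
    iqr = q3 - q1
    return (values < q1 - k * iqr) | (values > q3 + k * iqr)

# Invented per-passenger fares with one extreme value
fares = pd.Series([7.25, 7.9, 8.05, 13.0, 26.0, 30.0, 128.08])
mask = iqr_outliers(fares)
```

Applying the same mask separately to data.Fare and data.passenger_fare would be one way to check the claim that the per-passenger fare has fewer outliers.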

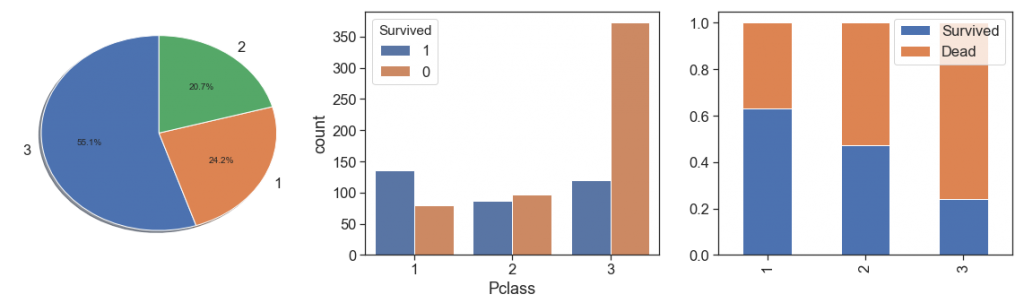

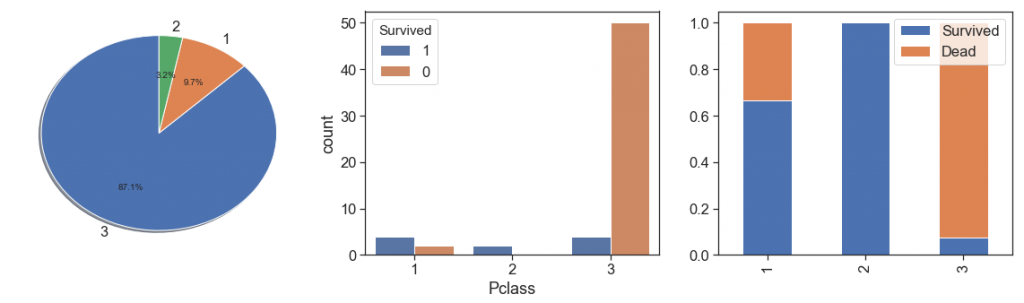

Pclass

Although class 3 has the largest number of passengers, we saw with the fare per passenger that the survival rate rises with the fare paid. This suggests some socioeconomic discrimination. It is confirmed when we look at the distribution of the data over the classes: the percentage bars show a clear, steep decrease in survival rate from the first to the third class.

charts('Pclass', data[(data.Survived>=0)])

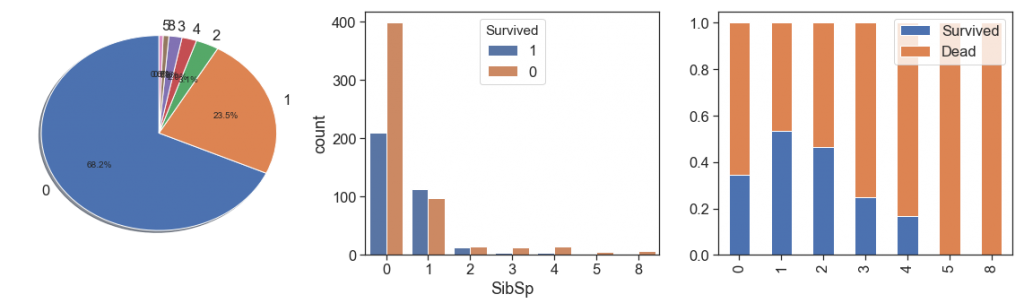

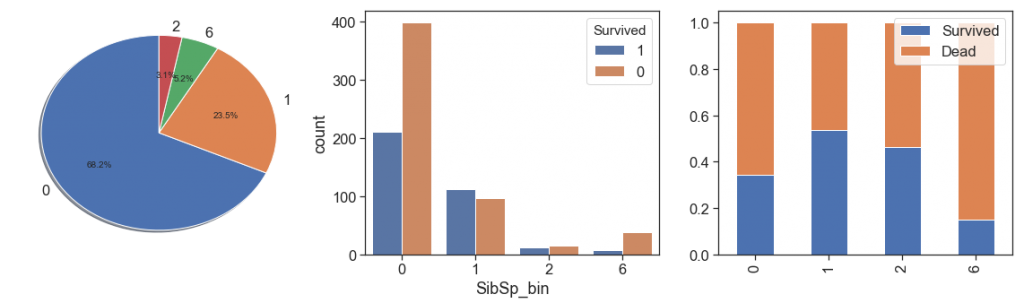

SibSp

charts('SibSp', data[(data.Survived>=0)])

Since passengers with more than 2 siblings/spouses are too few and have the lowest survival rates, we can aggregate all these cases into a single bin in order to increase representativeness while losing as little information as possible.

data['SibSp_bin'] = data.SibSp.apply(lambda x: 6 if x > 2 else x)

charts('SibSp_bin', data[(data.Survived>=0)])

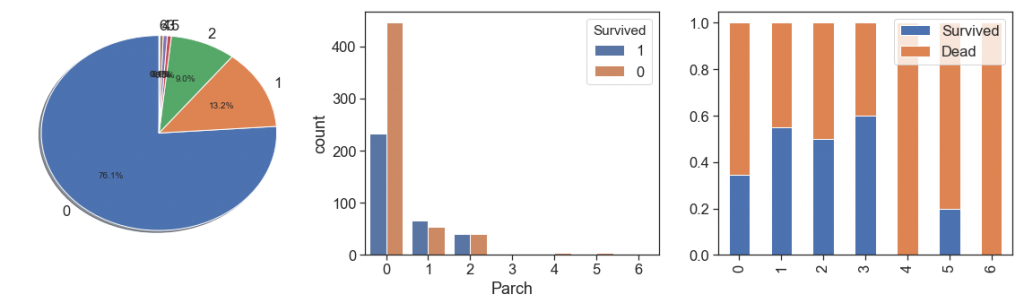

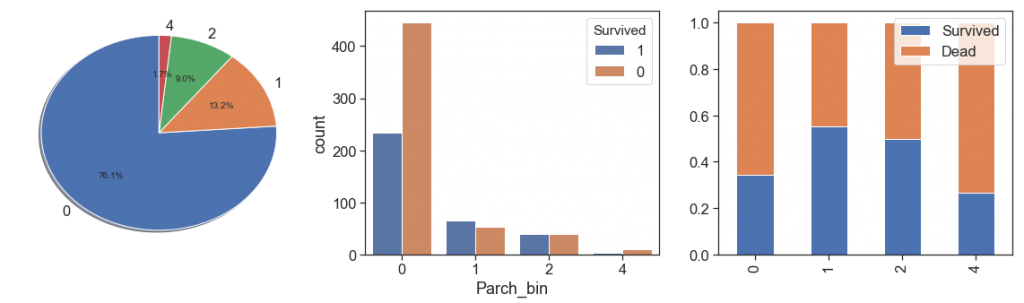

Parch

charts('Parch', data[data.Survived>=0])

As we did with SibSp, we will aggregate the Parch cases with 3 or more, even though Parch = 3 has the highest survival rate.

data['Parch_bin'] = data.Parch.apply(lambda x: x if x< 3 else 4)

charts('Parch_bin', data[(data.Survived>=0)])

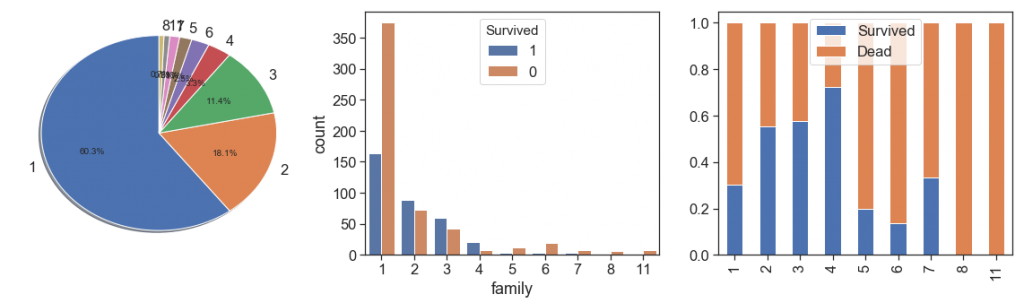

Family and non-relatives

If you investigate the data, you will notice that the total number of family members can be obtained by the sum of Parch and SibSp plus 1 (1 for the person of the respective record). So, let’s create the family feature and see what we get.

data['family'] = data.SibSp + data.Parch + 1

charts('family', data[data.Survived>=0])

As we can see, family groups of up to 4 people were more likely to survive than people without relatives on board.

However, from 5 family members on we see a drastic fall, with the 7-member cases level with the passengers travelling without family.

You may be led to think that this distortion clearly has some relation to social condition. Better to look at the actual data!

charts('Pclass', data[(data.family>4) & (data.Survived>=0)])

Yes, we have more cases in the third class, but on the other hand, what we see is that cases with more than 4 relatives were rare. On a more careful look, you will see that from 6 family members on we only have third class (25 in training, 10 in test). So we confirm that a large number of family members did make a difference, yes, if you were from the upper classes.

You must have a feeling of déjà vu, and yes, this metric is very similar to the one we have already created, the number of passengers with the same ticket.

So what’s the difference? In this one you have only the number of people aboard with family kinship, plus the passenger herself; in the previous one you have people travelling as a declared group, family members or not. So, when relatives bought tickets separately, the family feature counts them together while the ticket feature separates them. On the other hand, the family feature does not count travelers’ non-family companions, employees or friends, while the ticket feature does.

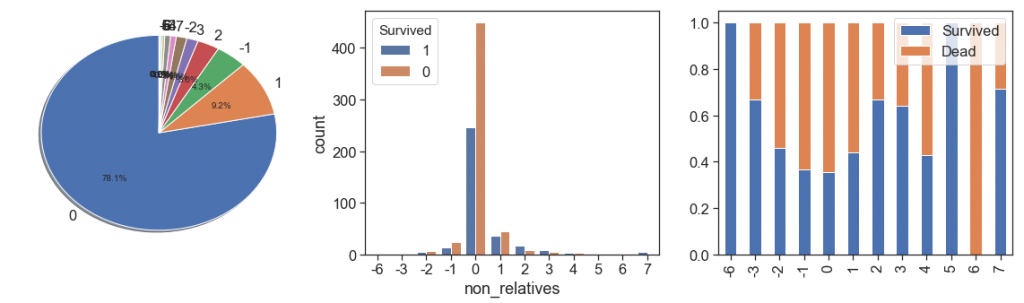

With this, we can now obtain the number of fellows or companions per passenger, that is, the number of non-relatives who traveled with the passenger.

data['non_relatives'] = data.qtd_same_ticket - data.family

charts('non_relatives', data[data.Survived>=0])

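Here you see negative numbers because non_relatives is the ticket group size minus the family size, so it goes below zero whenever a passenger has more relatives aboard than people sharing the ticket, for example when relatives bought separate tickets.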
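A toy example may help to see how the ticket count, the family size and their difference relate. All values below are invented: ticket T1 is a couple travelling with one companion, T2 is a passenger whose sibling is on another ticket that is not listed, and T3 is a passenger travelling alone.

```python
import pandas as pd

toy = pd.DataFrame({
    'Ticket': ['T1', 'T1', 'T1', 'T2', 'T3'],
    'SibSp':  [1, 1, 0, 1, 0],
    'Parch':  [0, 0, 0, 0, 0],
})

# People sharing the same ticket, relatives or not
toy['qtd_same_ticket'] = toy.Ticket.map(toy.Ticket.value_counts())

# Relatives aboard plus the passenger
toy['family'] = toy.SibSp + toy.Parch + 1

# Positive: companions on the ticket; negative: relatives on other tickets
toy['non_relatives'] = toy.qtd_same_ticket - toy.family
```

The companion on T1 gets 2 (two ticket mates, no relatives), while the T2 passenger gets -1 because the sibling holds a different ticket.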

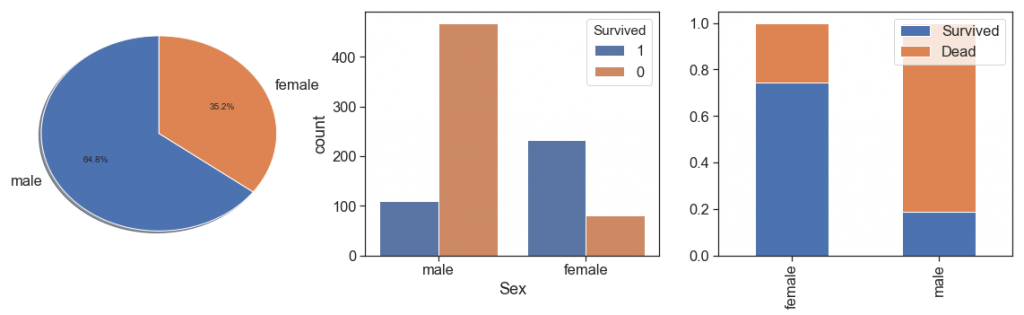

Sex

As is well known, women had a significantly higher survival rate than men in this disaster.

charts('Sex', data[(data.Survived>=0)])

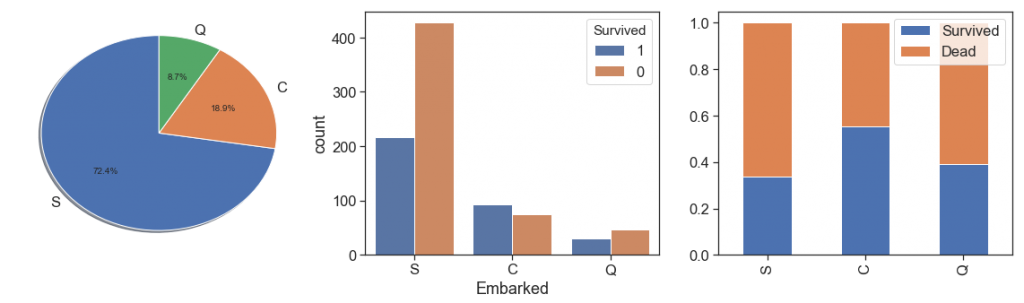

Embarked

First, we check the 2 null Embarked cases to find the most likely pattern and fill them with the respective mode.

Next, we take a look at the Embarked data. As we can see, the passengers that embarked at Cherbourg had the best survival rate, while most passengers embarked at Southampton and had the worst survival rate.

display(data[data.Embarked.isnull()])

# Missing values are NaN, not the string 'NA', so they are selected with isnull()

data.loc[data.Embarked.isnull(), 'Embarked'] = data[data.Cabin.str.match('B2', na=False) & (data.Pclass==1)].Embarked.mode()[0]

charts('Embarked', data[(data.Survived>=0)])
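The fill step can be checked on a few invented rows: the missing port is replaced by the mode of a reference group of similar passengers (here, first class with a cabin starting with B2, as in the code above). The data is made up for this sketch.

```python
import numpy as np
import pandas as pd

toy = pd.DataFrame({
    'Pclass':   [1, 1, 1, 3, 1],
    'Cabin':    ['B28', 'B20', 'B22', None, 'B26'],
    'Embarked': [np.nan, 'S', 'S', 'Q', 'C'],
})

# Reference group: first-class passengers whose cabin starts with "B2"
similar = toy.Cabin.str.startswith('B2', na=False) & (toy.Pclass == 1)

# NaN never equals a string, so the missing rows are selected with isnull()
toy.loc[toy.Embarked.isnull(), 'Embarked'] = toy.loc[similar, 'Embarked'].mode()[0]
```

A comparison such as Embarked == 'NA' would select no rows at all, which is why isnull() is used for the mask.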

Name


The Name feature has too much variance to be significant by itself, but it holds some valuable information to extract and check, such as:

- Personal Titles

- Existence of nicknames

- Existence of references to another person

- Family names

def Personal_Titles(df):

    # Raw strings keep the regex escapes intact

    pattern = (r'Mrs\.|Mr\.|Miss\.|Master|Dr\.|Lady\.|Countess\.|'
               r'Sir\.|Rev\.|Don\.|Major\.|Col\.|Jonkheer\.|'
               r'Capt\.|Ms\.|Mme\.|Mlle\.')

    df['Personal_Titles'] = df.Name.str.findall(pattern).apply(''.join)

    # Names without a recognized title fall back to Mr/Mrs according to Sex

    no_title = df.Personal_Titles == ''

    df.loc[no_title, 'Personal_Titles'] = df.loc[no_title, 'Sex'].apply(lambda x: 'Mr' if (x=='male') else 'Mrs')

    # Group the raw titles into a few categories

    groups = {'Mrs.': 'Mrs', 'Mme.': 'Mrs', 'Ms.': 'Mrs', 'Mr.': 'Mr', 'Miss.': 'Miss', 'Mlle.': 'Miss', 'Master': 'Kid',
              'Lady.': 'Royalty', 'Don.': 'Royalty', 'Jonkheer.': 'Royalty', 'Sir.': 'Royalty', 'Countess.': 'Royalty',
              'Capt.': 'Technical', 'Rev.': 'Technical', 'Major.': 'Technical', 'Col.': 'Technical', 'Dr.': 'Technical'}

    df['Personal_Titles'] = df.Personal_Titles.replace(groups)

    # Girls under 15 are also kids; compare against 'Miss', the value after the grouping above

    df.loc[(df.Personal_Titles=='Miss') & (df.Age>-1) & (df.Age<15), 'Personal_Titles'] = 'Kid'

Personal_Titles(data)

display(pd.pivot_table(data[['Personal_Titles', 'Survived']], aggfunc=np.count_nonzero,

columns=['Survived'] , index=['Personal_Titles']).T)

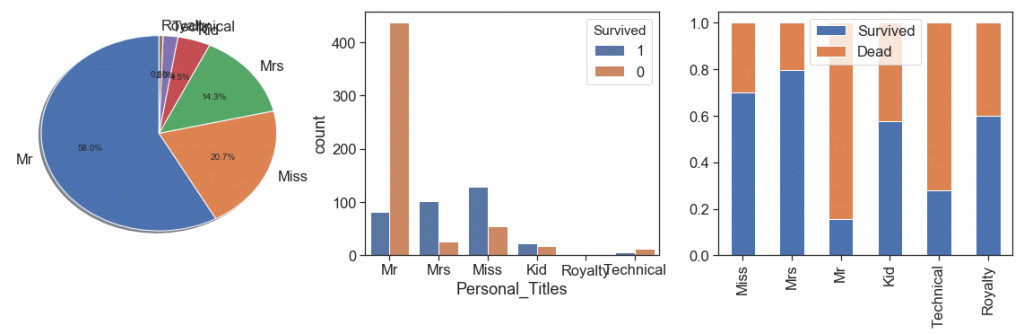

charts('Personal_Titles', data[(data.Survived>=0)])

As you can see above, I chose to group some titles, but for now keep 2 small sets (Technical and Royalty), because they show interesting variations in survival rate.
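As an alternative to listing every title in the pattern, the title can be captured as whatever sits between the comma and the first period of the name. This is a sketch of a different technique from the one used above, shown on four names in the dataset's "Surname, Title. Given names" format.

```python
import pandas as pd

names = pd.Series([
    'Braund, Mr. Owen Harris',
    'Cumings, Mrs. John Bradley (Florence Briggs Thayer)',
    'Heikkinen, Miss. Laina',
    'Palsson, Master. Gosta Leonard',
])

# Capture the text between ", " and the first "."
titles = names.str.extract(r',\s*([^.,]+)\.', expand=False).str.strip()
```

The trade-off is that unexpected titles are kept as they are instead of being left empty, so the grouping step would need a default category for them.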

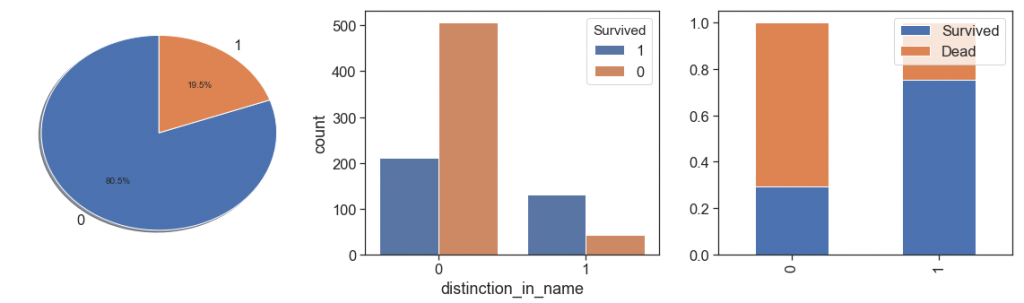

Next, we identify the names that mention other people or contain nicknames and create a boolean feature.

data['distinction_in_name'] = (
    (data.Name.str.findall(r'\(').apply(lambda x: ''.join(x)) == '(')
    | (data.Name.str.findall(r'"[A-Za-z"]*"').apply(lambda x: ''.join(x).strip('"')) != ''))

data.distinction_in_name = data.distinction_in_name.apply(lambda x: 1 if x else 0)

charts('distinction_in_name', data[(data.Survived>=0)])

It is interesting to note that those who have some type of reference or distinction in their names had a higher survival rate.

Next, we find 872 surnames in this dataset. Even after putting the loners into a single category, we have 229 with more than one member. That is a huge categorical feature to work with, and it is too sparse. Most of the surnames have too few samples to be really significant for almost any algorithm without the risk of overfitting. In addition, there are 18 surnames with more than one member that occur exclusively in the test data set.

So, we create this feature by aggregating the single-member surnames into one category, and use it in the models that can work with it to check whether we get better results. Alternatively, we can use dimensionality reduction methods.

# Surname = the run of letters right before the comma in Name

surname_raw = data.Name.str.findall(r'[A-Za-z]*,').apply(lambda x: ''.join(x).strip(','))

surnames = surname_raw.value_counts()

print('Total of different surnames aboard:', surnames.shape[0])

print('Surnames with more than one person aboard:', (surnames>1).sum())

data['surname'] = surname_raw.apply(lambda x: x if surnames[x]>1 else 'Alone')

n_surnames = data.surname.nunique()

# Rows with Survived>=0 are training data; rows with Survived<0 are test data

train_surnames = set(data.surname[data.Survived>=0].unique().tolist())

print('Surnames with more than one member aboard that occur only in the test data set:',
      n_surnames-len(train_surnames))

test_surnames = set(data.surname[data.Survived<0].unique().tolist())

print('Surnames with more than one member aboard that occur only in the train data set:',
      n_surnames-len(test_surnames))

# Surnames that never occur in the training data cannot be learned, so they are marked

data.surname = data.surname.apply(lambda x: x if x in train_surnames else 'Exclude')

del surnames, surname_raw, test_surnames, train_surnames
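The idea of collapsing single-member surnames into one category can be seen in isolation on a few invented names. Note that this sketch takes everything before the comma as the surname, which differs slightly from the regex above (it keeps multi-word surnames whole).

```python
import pandas as pd

# Invented names in the "Surname, Title. Given name" format
names = pd.Series(['Smith, Mr. A', 'Smith, Mrs. B', 'Jones, Miss. C', 'Brown, Mr. D', 'Brown, Master. E'])

# Everything before the comma is taken as the surname
surname = names.str.split(',').str[0].str.strip()

# Keep surnames shared by more than one passenger, group the rest as 'Alone'
counts = surname.value_counts()
grouped = surname.where(surname.map(counts) > 1, 'Alone')
```

Grouping rare categories this way reduces sparsity before encoding, at the cost of losing the identity of the single-member surnames.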

# Reuse the known cabin of a ticket for the passengers on the same ticket whose cabin is null

CabinByTicket = data.loc[~data.Cabin.isnull(), ['Ticket', 'Cabin']].groupby(by='Ticket').Cabin.min()

before = data.Cabin.isnull().sum()

data.loc[data.Cabin.isnull(), 'Cabin'] = data.loc[data.Cabin.isnull(), 'Ticket'].map(CabinByTicket)

print('Cabin nulls reduced:', (before - data.Cabin.isnull().sum()))

del CabinByTicket, before

# The remaining unknown cabins get the placeholder N999

data.loc[data.Cabin.isnull(), 'Cabin'] = 'N999'

data['Cabin_Letter'] = data.Cabin.str.findall(r'[^a-z]\d\d*')

data['Cabin_Number'] = data.apply(lambda x: 0 if len(str(x.Cabin))== 1 else int(int(x.Cabin_Letter[0][1:])/10), axis=1)

data.Cabin_Letter = data.apply(lambda x: x.Cabin if len(str(x.Cabin))== 1 else x.Cabin_Letter[0][0], axis=1)

display(data[['Fare', 'Cabin_Letter']].groupby(['Cabin_Letter']).agg([np.median, np.mean, np.count_nonzero, np.max, np.min]))
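The split of Cabin into a deck letter and a number bucket can be illustrated with str.extract on a few cabin strings in the style of the dataset. This is an alternative sketch, not the code used above; like the original, it takes the first letter-plus-digits token and divides the number by 10.

```python
import pandas as pd

cabins = pd.Series(['C85', 'B57 B59', 'F G73', 'T', 'N999'])

# First token made of one capital letter followed by digits; NaN when there is none
parts = cabins.str.extract(r'([A-Z])(\d+)')

# Cabins without a number keep their single letter and get number 0
letter = parts[0].fillna(cabins.str[0])
number = parts[1].fillna('0').astype(int) // 10
```

For 'F G73' the first letter that is directly followed by digits is G, so the deck becomes G and the number bucket 7, the same result the findall pattern above would give.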

Cabin T does not exist in the test data set. This passenger is from first class and his passenger fare is the same as that of 5 other first-class passengers. So we change it to ‘C’ to keep the same distribution among the six.

display(data[data.Cabin=='T'])

display(data.Cabin_Letter[data.passenger_fare==35.5].value_counts())

data.loc[data.Cabin_Letter=='T', 'Cabin_Letter'] = 'C'

Fill the missing cabin letters and numbers of third class with the most common pattern among the known cabins of the same passenger fare range, using ranges with one or as few candidate patterns as possible.

# (low, high, aggregation for Cabin_Number) of each third-class passenger fare range

third_class_ranges = [(0.0, 6.237, 'mode'), (6.237, 7.225, 'mode'), (7.225, 7.65, 'min'),
                      (7.65, 7.75, 'min'), (7.75, 8.0, 'min'), (8.0, np.inf, 'mode')]

for low, high, number_agg in third_class_ranges:

    in_range = (data.passenger_fare>=low) & (data.passenger_fare<high) & (data.Pclass==3)

    known = data[in_range & (data.Cabin!='N999')]

    unknown = in_range & (data.Cabin=='N999')

    data.loc[unknown, 'Cabin_Letter'] = known.Cabin_Letter.mode()[0]

    data.loc[unknown, 'Cabin_Number'] = known.Cabin_Number.mode()[0] if number_agg=='mode' else known.Cabin_Number.min()

Fill the missing cabin letters and numbers of second class with the most common pattern among the known cabins of the same passenger fare range, using ranges with one or as few candidate patterns as possible.

# (low, high) of each second-class passenger fare range handled so far

second_class_ranges = [(0, 8.59), (8.59, 10.5), (10.5, 10.501), (10.501, 12.5), (12.5, 13.)]

for low, high in second_class_ranges:

    in_range = (data.passenger_fare>=low) & (data.passenger_fare<high) & (data.Pclass==2)

    known = data[in_range & (data.Cabin!='N999')]

    unknown = in_range & (data.Cabin=='N999')

    data.loc[unknown, 'Cabin_Letter'] = known.Cabin_Letter.mode()[0]

    data.loc[unknown, 'Cabin_Number'] = known.Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>=13.) & (data.passenger_fare<13.1) & (data.Pclass==2) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>=13.) & (data.passenger_fare<13.1) & (data.Pclass==2) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>=13.) & (data.passenger_fare<13.1) & (data.Pclass==2) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>=13.) & (data.passenger_fare<13.1) & (data.Pclass==2) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>=13.1) & (data.Pclass==2) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>=13.1) & (data.Pclass==2) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>=13.1) & (data.Pclass==2) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>=13.1) & (data.Pclass==2) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

Fill the missing cabin letters of first-class passengers with the most common pattern in the same passenger fare range, using ranges with one or the fewest possible cases.

data.loc[(data.passenger_fare==0) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare==0) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare==0) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare==0) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>0) & (data.passenger_fare<=19.69) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>0) & (data.passenger_fare<=19.69) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>0) & (data.passenger_fare<=19.69) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>0) & (data.passenger_fare<=19.69) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>19.69) & (data.passenger_fare<=23.374) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>19.69) & (data.passenger_fare<=23.374) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>19.69) & (data.passenger_fare<=23.374) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>19.69) & (data.passenger_fare<=23.374) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>23.374) & (data.passenger_fare<=25.25) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>23.374) & (data.passenger_fare<=25.25) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>23.374) & (data.passenger_fare<=25.25) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>23.374) & (data.passenger_fare<=25.25) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>25.69) & (data.passenger_fare<=25.929) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>25.69) & (data.passenger_fare<=25.929) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>25.69) & (data.passenger_fare<=25.929) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>25.69) & (data.passenger_fare<=25.929) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>25.99) & (data.passenger_fare<=26.) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>25.99) & (data.passenger_fare<=26.) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>25.99) & (data.passenger_fare<=26.) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>25.99) & (data.passenger_fare<=26.) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>26.549) & (data.passenger_fare<=26.55) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>26.549) & (data.passenger_fare<=26.55) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>26.549) & (data.passenger_fare<=26.55) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>26.549) & (data.passenger_fare<=26.55) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>27.4) & (data.passenger_fare<=27.5) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>27.4) & (data.passenger_fare<=27.5) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>27.4) & (data.passenger_fare<=27.5) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>27.4) & (data.passenger_fare<=27.5) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>27.7207) & (data.passenger_fare<=27.7208) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>27.7207) & (data.passenger_fare<=27.7208) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>27.7207) & (data.passenger_fare<=27.7208) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>27.7207) & (data.passenger_fare<=27.7208) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>29.69) & (data.passenger_fare<=29.7) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>29.69) & (data.passenger_fare<=29.7) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>29.69) & (data.passenger_fare<=29.7) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>29.69) & (data.passenger_fare<=29.7) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>30.49) & (data.passenger_fare<=30.5) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>30.49) & (data.passenger_fare<=30.5) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>30.49) & (data.passenger_fare<=30.5) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>30.49) & (data.passenger_fare<=30.5) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>30.6) & (data.passenger_fare<=30.7) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>30.6) & (data.passenger_fare<=30.7) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>30.6) & (data.passenger_fare<=30.7) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>30.6) & (data.passenger_fare<=30.7) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>31.67) & (data.passenger_fare<=31.684) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>31.67) & (data.passenger_fare<=31.684) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>31.67) & (data.passenger_fare<=31.684) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>31.67) & (data.passenger_fare<=31.684) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>39.599) & (data.passenger_fare<=39.6) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>39.599) & (data.passenger_fare<=39.6) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>39.599) & (data.passenger_fare<=39.6) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>39.599) & (data.passenger_fare<=39.6) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>41) & (data.passenger_fare<=41.2) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>41) & (data.passenger_fare<=41.2) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>41) & (data.passenger_fare<=41.2) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>41) & (data.passenger_fare<=41.2) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>45.49) & (data.passenger_fare<=45.51) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>45.49) & (data.passenger_fare<=45.51) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>45.49) & (data.passenger_fare<=45.51) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>45.49) & (data.passenger_fare<=45.51) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>49.5) & (data.passenger_fare<=49.51) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>49.5) & (data.passenger_fare<=49.51) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>49.5) & (data.passenger_fare<=49.51) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>49.5) & (data.passenger_fare<=49.51) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]

data.loc[(data.passenger_fare>65) & (data.passenger_fare<=70) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Letter'] =\

data[(data.passenger_fare>65) & (data.passenger_fare<=70) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Letter.mode()[0]

data.loc[(data.passenger_fare>65) & (data.passenger_fare<=70) & (data.Pclass==1) & (data.Cabin=='N999'), 'Cabin_Number'] =\

data[(data.passenger_fare>65) & (data.passenger_fare<=70) & (data.Pclass==1) & (data.Cabin!='N999')].Cabin_Number.mode()[0]
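The repeated fill pattern above can be sketched as one small helper. This is only an illustration, not the notebook's own code: the function name fill_cabin_by_fare, its parameters and the toy rows are hypothetical, and it assumes half-open fare ranges [low, high), whereas the first-class rules above use (low, high].

```python
import pandas as pd

def fill_cabin_by_fare(df, pclass, low, high, col, how="mode"):
    """Fill `col` for rows whose Cabin is the 'N999' placeholder, using the
    known cabins of the same class whose fare lies in [low, high)."""
    in_range = (
        (df.passenger_fare >= low)
        & (df.passenger_fare < high)
        & (df.Pclass == pclass)
    )
    known = df.loc[in_range & (df.Cabin != 'N999'), col]
    if known.empty:
        # No known cabin in this range: leave the rows untouched
        return df
    value = known.mode()[0] if how == "mode" else known.min()
    df.loc[in_range & (df.Cabin == 'N999'), col] = value
    return df

# Toy rows invented for illustration only
toy = pd.DataFrame({
    'passenger_fare': [7.70, 7.70, 7.72, 7.68],
    'Pclass': [3, 3, 3, 3],
    'Cabin': ['F38', 'F38', 'E10', 'N999'],
    'Cabin_Letter': ['F', 'F', 'E', 'N'],
})
toy = fill_cabin_by_fare(toy, pclass=3, low=7.65, high=7.75, col='Cabin_Letter')
print(toy)
```

The guard on an empty group matters because calling .mode()[0] on an empty selection raises an error, which the one-line rules above would hit if a fare range had no known cabins.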

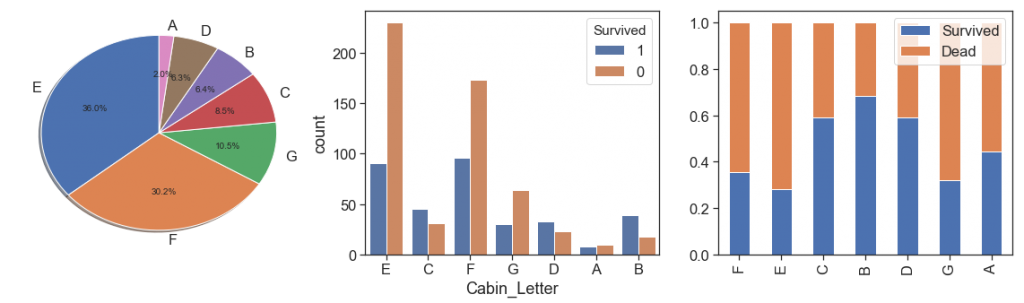

As shown below, we achieved good results after filling the nulls, but they deserve caution because so many cabins were originally missing. In addition, the cabin may actually have made more difference in the deaths caused by the impact than among those who drowned.

charts('Cabin_Letter', data[(data.Survived>=0)])

Rescue of family relationships

After some work, we notice that SibSp and Parch are difficult to understand in isolation, and that it is difficult to extract family relationships directly from this data without a closer look.

So, in this configuration we do not have clear family relationships, and this information is essential for filling the null ages with a better distribution and accuracy.

Let’s start to rescue:

I discovered the first treatment when checking the results: I noticed that one case had no relationship assigned. Looking at the details below, we can see that it is a family traveling on more than one ticket whose son has no age. So I manually flagged this one case as a son, since the other members are the father and the mother, and the son has the pattern SibSp 0 and Parch 2.

display(data[data.Name.str.findall('Bourke').apply(lambda x: ''.join(map(str, x)).strip('[]'))=='Bourke'])

family_w_age = data.Ticket[(data.Parch>0) & (data.SibSp>0) & (data.Age==-1)].unique().tolist()

data[data.Ticket.isin(family_w_age)].sort_values('Ticket')

data['sons'] = data.apply(lambda x : \

1 if ((x.Ticket in (['2661', '2668', 'A/5. 851', '4133'])) & (x.SibSp>0)) else 0, axis=1)

data.sons += data.apply(lambda x : \

1 if ((x.Ticket in (['CA. 2343'])) & (x.SibSp>1)) else 0, axis=1)

data.sons += data.apply(lambda x : \

1 if ((x.Ticket in (['W./C. 6607'])) & (x.Personal_Titles not in (['Mr', 'Mrs']))) else 0, axis=1)

data.sons += data.apply(lambda x: 1 if ((x.Parch>0) & (x.Age>=0) & (x.Age<20)) else 0, axis=1)

data.sons.loc[data.PassengerId==594] = 1 # Son with a different pattern (family with two tickets)

data.sons.loc[data.PassengerId==1252] = 1 # Case of 'CA. 2343' and last rule

data.sons.loc[data.PassengerId==1084] = 1 # Case of 'A/5. 851' and last rule

data.sons.loc[data.PassengerId==1231] = 1 # Case of 'A/5. 851' and last rule

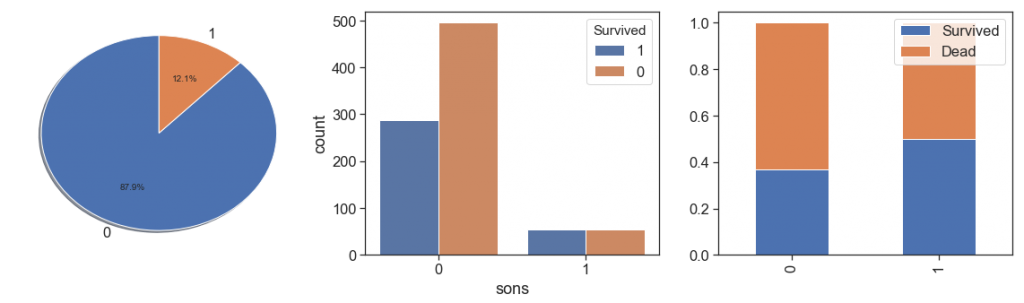

charts('sons', data[(data.Survived>=0)])

We observe that only 12.1% of the passengers are sons, and they had a better survival rate than the others.

Next, we rescue the parents, and check cases where we have both (mother and father), and cases where we have only one aboard.

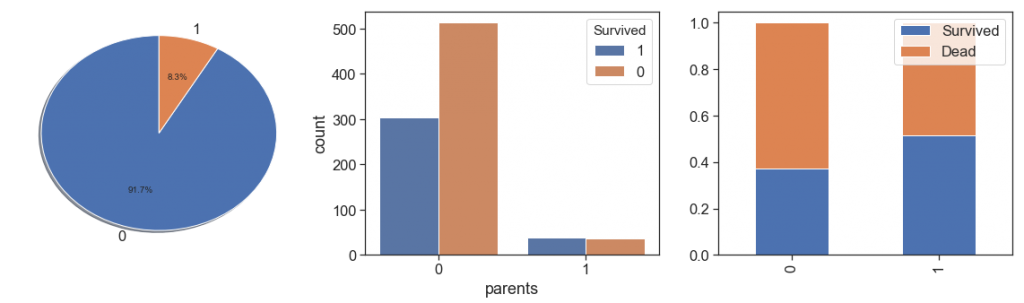

data['parents'] = data.apply(lambda x : \

1 if ((x.Ticket in (['2661', '2668', 'A/5. 851', '4133'])) & (x.SibSp==0)) else 0, axis=1)

data.parents += data.apply(lambda x : \

1 if ((x.Ticket in (['CA. 2343'])) & (x.SibSp==1)) else 0, axis=1)

data.parents += data.apply(lambda x : 1 if ((x.Ticket in (['W./C. 6607'])) & (x.Personal_Titles in (['Mr', 'Mrs']))) \

else 0, axis=1)

# Identify parents, taking care with null ages

data.parents += data.apply(lambda x: 1 if ((x.Parch>0) & (x.SibSp>0) & (x.Age>19) & (x.Age<=45) ) else 0, axis=1)

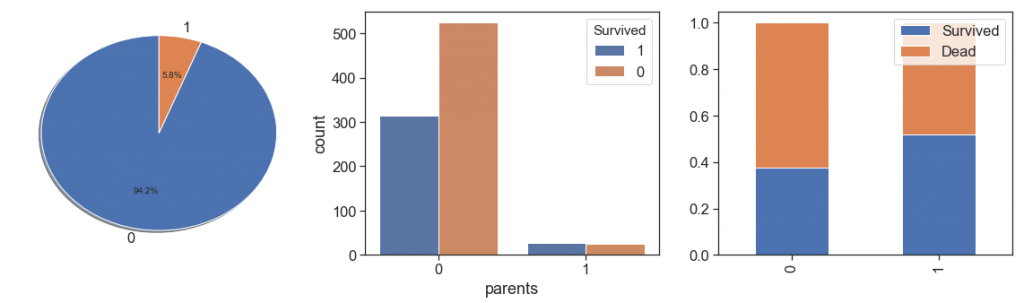

charts('parents', data[(data.Survived>=0)])

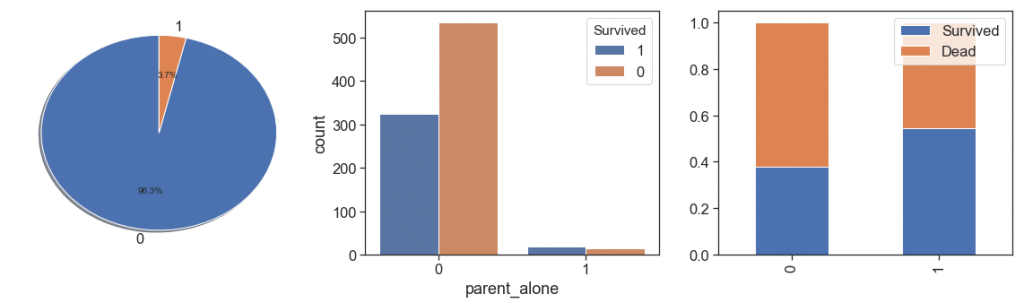

data['parent_alone'] = data.apply(lambda x: 1 if ((x.Parch>0) & (x.SibSp==0) & (x.Age>19) & (x.Age<=45) ) else 0, axis=1)

charts('parent_alone', data[(data.Survived>=0)])

We can see that both cases are too similar, so keeping these two pieces of information separately is not significant.

Before putting them together, as I had learned when assembling the sons, I made a visual inspection and discovered some cases of sons and parents that required different rules to assign them. As I did this visually and it is not a rule for a pipeline, I applied the settings manually.

t_p_alone = data.Ticket[data.parent_alone==1].tolist()

data[data.Ticket.isin(t_p_alone)].sort_values('Ticket')[96:]

data.parent_alone.loc[data.PassengerId==141] = 1

data.parent_alone.loc[data.PassengerId==541] = 0

data.sons.loc[data.PassengerId==541] = 1

data.parent_alone.loc[data.PassengerId==1078] = 0

data.sons.loc[data.PassengerId==1078] = 1

data.parent_alone.loc[data.PassengerId==98] = 0

data.sons.loc[data.PassengerId==98] = 1

data.parent_alone.loc[data.PassengerId==680] = 0

data.sons.loc[data.PassengerId==680] = 1

data.parent_alone.loc[data.PassengerId==915] = 0

data.sons.loc[data.PassengerId==915] = 1

data.parent_alone.loc[data.PassengerId==333] = 0

data.sons.loc[data.PassengerId==333] = 1

data.parent_alone.loc[data.PassengerId==119] = 0

data.sons.loc[data.PassengerId==119] = 1

data.parent_alone.loc[data.PassengerId==319] = 0

data.sons.loc[data.PassengerId==319] = 1

data.parent_alone.loc[data.PassengerId==103] = 0

data.sons.loc[data.PassengerId==103] = 1

data.parents.loc[data.PassengerId==154] = 0

data.sons.loc[data.PassengerId==1084] = 1

data.parents.loc[data.PassengerId==581] = 0

data.sons.loc[data.PassengerId==581] = 1

data.parent_alone.loc[data.PassengerId==881] = 0

data.sons.loc[data.PassengerId==881] = 1

data.parent_alone.loc[data.PassengerId==1294] = 0

data.sons.loc[data.PassengerId==1294] = 1

data.parent_alone.loc[data.PassengerId==378] = 0

data.sons.loc[data.PassengerId==378] = 1

data.parent_alone.loc[data.PassengerId==167] = 1

data.parent_alone.loc[data.PassengerId==357] = 0

data.sons.loc[data.PassengerId==357] = 1

data.parent_alone.loc[data.PassengerId==918] = 0

data.sons.loc[data.PassengerId==918] = 1

data.parent_alone.loc[data.PassengerId==1042] = 0

data.sons.loc[data.PassengerId==1042] = 1

data.parent_alone.loc[data.PassengerId==540] = 0

data.sons.loc[data.PassengerId==540] = 1

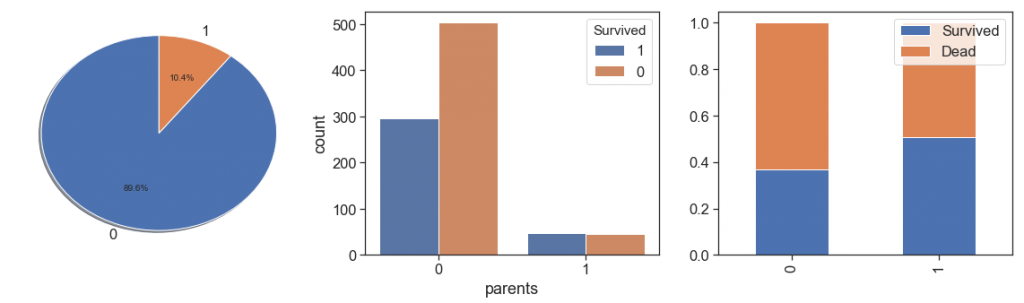

data.parents += data.parent_alone

charts('parents', data[(data.Survived>=0)])

Next, we rescue the grandparents and the grandparents alone. We found the same situation with fewer cases, and decided to put all parents and grandparents in one feature and leave it to age to distinguish them.

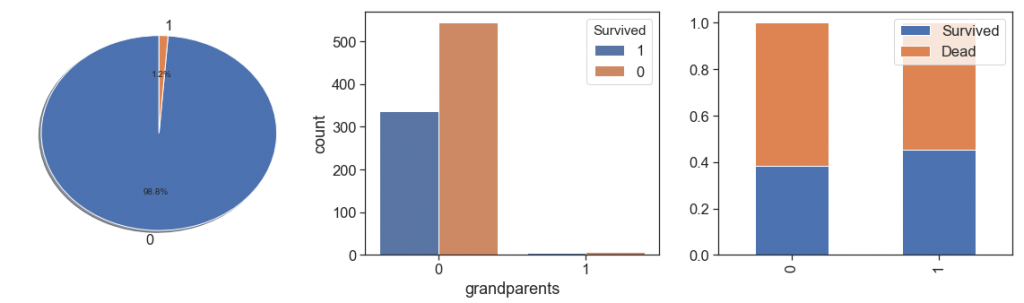

data['grandparents'] = data.apply(lambda x: 1 if ((x.Parch>0) & (x.SibSp>0) & (x.Age>19) & (x.Age>45) ) else 0, axis=1)

charts('grandparents', data[(data.Survived>=0)])

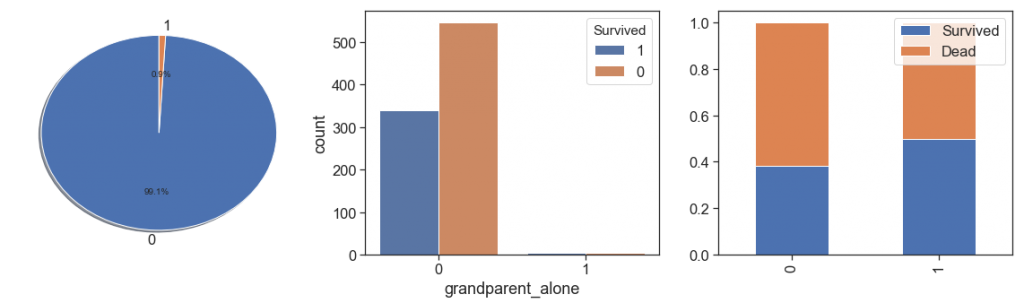

data['grandparent_alone'] = data.apply(lambda x: 1 if ((x.Parch>0) & (x.SibSp==0) & (x.Age>45) ) else 0, axis=1)

charts('grandparent_alone', data[(data.Survived>=0)])

data.parents += data.grandparent_alone + data.grandparents

charts('parents', data[(data.Survived>=0)])

Next, we identify the relatives aboard:

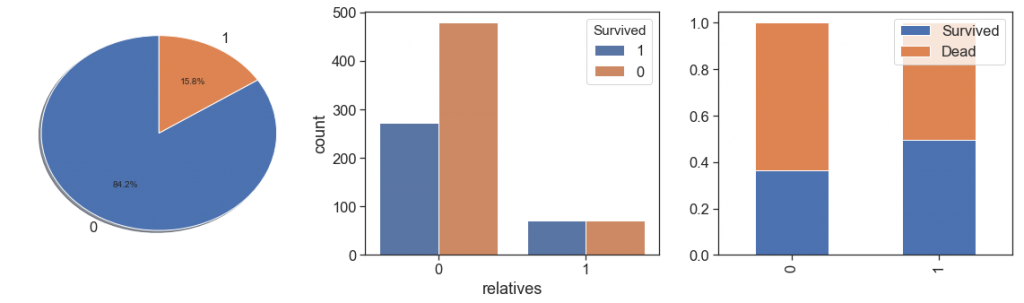

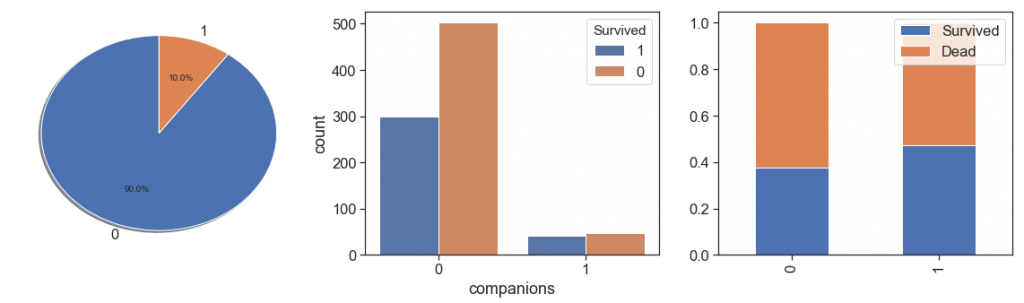

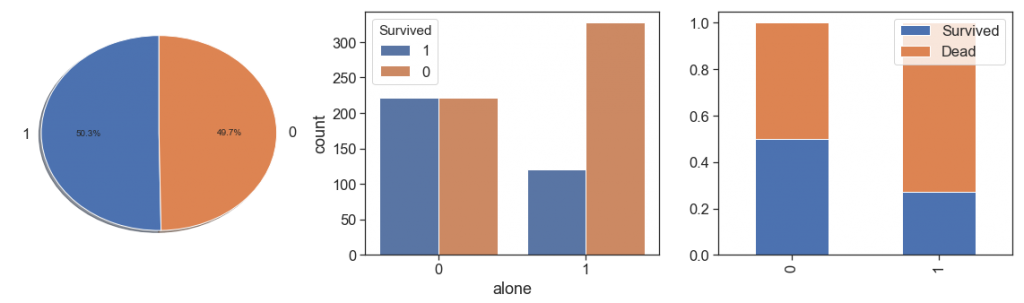

data['relatives'] = data.apply(lambda x: 1 if ((x.SibSp>0) & (x.Parch==0)) else 0, axis=1)

charts('relatives', data[(data.Survived>=0)])

And then the companions: people who traveled with a family but have no family relationship with them.

data['companions'] = data.apply(lambda x: 1 if ((x.SibSp==0) & (x.Parch==0) & (x.same_tckt==1)) else 0, axis=1)

charts('companions', data[(data.Survived>=0)])

Finally, we rescue the passengers that traveled alone.

data['alone'] = data.apply(lambda x: 1 if ((x.SibSp==0) & (x.Parch==0) & (x.same_tckt==0)) else 0, axis=1)

charts('alone', data[(data.Survived>=0)])

As we can see, people with a family relationship, even if only as companions, had better and very similar survival rates compared with people who traveled alone.
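The relatives, companions and alone flags are plain boolean conditions on SibSp, Parch and same_tckt, so they can also be computed without a row-wise apply. The sketch below is an alternative formulation on invented toy rows, not a change to the notebook's logic; the variable no_family is a hypothetical helper name.

```python
import pandas as pd

# Toy rows invented for illustration only
toy = pd.DataFrame({
    'SibSp':     [1, 0, 0, 0],
    'Parch':     [0, 0, 0, 2],
    'same_tckt': [1, 1, 0, 1],
})

# Passengers with neither siblings/spouse nor parents/children aboard
no_family = (toy.SibSp == 0) & (toy.Parch == 0)

# Same rules as the apply-based lambdas, evaluated column-wise
toy['relatives'] = ((toy.SibSp > 0) & (toy.Parch == 0)).astype(int)
toy['companions'] = (no_family & (toy.same_tckt == 1)).astype(int)
toy['alone'] = (no_family & (toy.same_tckt == 0)).astype(int)
print(toy)
```

Column-wise masks give the same 0/1 values as the lambdas and are usually faster on larger frames, since pandas evaluates them without a Python-level loop over rows.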

Now we can work on the null ages and then on the age information itself.

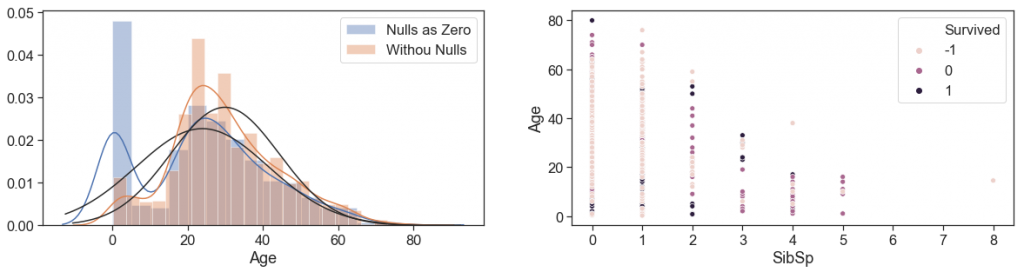

Age

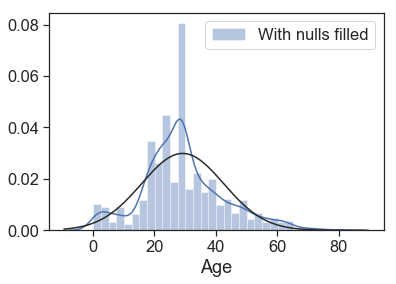

We start with the number of null cases by Survived, as a reminder that it is too high.

Then we plot the distributions of Age, to check how well it fits the normal and to see the distortion when a single value (zero) is applied to the null cases.

Next, we make the scatter plot of Age and siblings, and see that age decreases as the number of siblings increases, but with a wide range.

fig = plt.figure(figsize=(20, 10))

fig1 = fig.add_subplot(221)

g = sns.distplot(data.Age.fillna(0), fit=norm, label='Nulls as Zero')

g = sns.distplot(data[~data.Age.isnull()].Age, fit=norm, label='Without Nulls')

plt.legend(loc='upper right')

print('Survived without Age:')

display(data[data.Age.isnull()].Survived.value_counts())

fig2 = fig.add_subplot(222)

g = sns.scatterplot(data = data[(~data.Age.isnull())], y='Age', x='SibSp', hue='Survived')

From the tables below, we can see that our effort to get the Personal Titles and rescue the family relationships produces better medians to apply to the null ages.

print('Mean and median ages by siblings:')

data.loc[data.Age.isnull(), 'Age'] = -1

display(data.loc[(data.Age>=0), ['SibSp', 'Age']].groupby('SibSp').agg([np.mean, np.median]).T)

print('\nMedian ages by Personal_Titles:')

Ages = { 'Age' : {'median'}}

display(data[data.Age>=0][['Age', 'Personal_Titles', 'parents', 'grandparents', 'sons', 'relatives', 'companions', 'alone']].\

groupby('Personal_Titles').agg(Ages).T)

print('\nMedian ages by Personal Titles and Family Relationships:')

display(pd.pivot_table(data[data.Age>=0][['Age', 'Personal_Titles', 'parents', 'grandparents',

'sons', 'relatives', 'companions','alone']],

aggfunc=np.median,

index=['parents', 'grandparents', 'sons', 'relatives', 'companions', 'alone'] ,

columns=['Personal_Titles']))

print('\nNulls ages by Personal Titles and Family Relationships:')

display(data[data.Age<0][['Personal_Titles', 'parents', 'grandparents', 'sons', 'relatives', 'companions', 'alone']].\

groupby('Personal_Titles').agg([sum]))

So, we apply to the null ages the respective median of the same personal title and the same family relationship, but first we create a binary feature to keep the information about the presence of nulls.

data['Without_Age'] = data.Age.apply(lambda x: 0 if x>0 else 1)

data.Age.loc[(data.Age<0) & (data.companions==1) & (data.Personal_Titles=='Miss')] = \

data.Age[(data.Age>=0) & (data.companions==1) & (data.Personal_Titles=='Miss')].median()

data.Age.loc[(data.Age<0) & (data.companions==1) & (data.Personal_Titles=='Mr')] = \

data.Age[(data.Age>=0) & (data.companions==1) & (data.Personal_Titles=='Mr')].median()

data.Age.loc[(data.Age<0) & (data.companions==1) & (data.Personal_Titles=='Mrs')] = \

data.Age[(data.Age>=0) & (data.companions==1) & (data.Personal_Titles=='Mrs')].median()

data.Age.loc[(data.Age<0) & (data.alone==1) & (data.Personal_Titles=='Kid')] = \

data.Age[(data.Age>=0) & (data.alone==1) & (data.Personal_Titles=='Kid')].median()

data.Age.loc[(data.Age<0) & (data.alone==1) & (data.Personal_Titles=='Technical')] = \

data.Age[(data.Age>=0) & (data.alone==1) & (data.Personal_Titles=='Technical')].median()

data.Age.loc[(data.Age<0) & (data.alone==1) & (data.Personal_Titles=='Miss')] = \

data.Age[(data.Age>=0) & (data.alone==1) & (data.Personal_Titles=='Miss')].median()

data.Age.loc[(data.Age<0) & (data.alone==1) & (data.Personal_Titles=='Mr')] = \

data.Age[(data.Age>=0) & (data.alone==1) & (data.Personal_Titles=='Mr')].median()

data.Age.loc[(data.Age<0) & (data.alone==1) & (data.Personal_Titles=='Mrs')] = \

data.Age[(data.Age>=0) & (data.alone==1) & (data.Personal_Titles=='Mrs')].median()

data.Age.loc[(data.Age<0) & (data.parents==1) & (data.Personal_Titles=='Mr')] = \

data.Age[(data.Age>=0) & (data.parents==1) & (data.Personal_Titles=='Mr')].median()

data.Age.loc[(data.Age<0) & (data.parents==1) & (data.Personal_Titles=='Mrs')] = \

data.Age[(data.Age>=0) & (data.parents==1) & (data.Personal_Titles=='Mrs')].median()

data.Age.loc[(data.Age<0) & (data.sons==1) & (data.Personal_Titles=='Kid')] = \

data.Age[(data.Age>=0) & (data.Personal_Titles=='Kid')].median()

data.Age.loc[(data.Age.isnull()) & (data.sons==1) & (data.Personal_Titles=='Kid')] = \

data.Age[(data.Age>=0) & (data.Personal_Titles=='Kid')].median()

data.Age.loc[(data.Age<0) & (data.sons==1) & (data.Personal_Titles=='Miss')] = \

data.Age[(data.Age>=0) & (data.sons==1) & (data.Personal_Titles=='Miss')].median()

data.Age.loc[(data.Age<0) & (data.sons==1) & (data.Personal_Titles=='Mr')] = \

data.Age[(data.Age>=0) & (data.sons==1) & (data.Personal_Titles=='Mr')].median()

data.Age.loc[(data.Age<0) & (data.sons==1) & (data.Personal_Titles=='Mrs')] = \

data.Age[(data.Age>=0) & (data.sons==1) & (data.Personal_Titles=='Mrs')].median()

data.Age.loc[(data.Age<0) & (data.relatives==1) & (data.Personal_Titles=='Miss')] = \

data.Age[(data.Age>=0) & (data.relatives==1) & (data.Personal_Titles=='Miss')].median()

data.Age.loc[(data.Age<0) & (data.relatives==1) & (data.Personal_Titles=='Mr')] = \

data.Age[(data.Age>=0) & (data.sons==1) & (data.Personal_Titles=='Mr')].median()

data.Age.loc[(data.Age<0) & (data.relatives==1) & (data.Personal_Titles=='Mrs')] = \

data.Age[(data.Age>=0) & (data.relatives==1) & (data.Personal_Titles=='Mrs')].median()
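The rule-by-rule assignments above all follow one idea: replace a missing age with the median age of passengers in the same group. A compact way to express that idea is a grouped median, sketched below on invented toy rows. It is a simplification under stated assumptions: it groups by Personal_Titles and a single relationship flag (alone), while the notebook applies each relationship flag in turn, and the column Age_filled is a hypothetical name.

```python
import pandas as pd

# Toy rows invented for illustration; -1 marks a missing age, as in the notebook
toy = pd.DataFrame({
    'Personal_Titles': ['Mr', 'Mr', 'Mr', 'Miss', 'Miss', 'Miss'],
    'alone':           [1, 1, 1, 0, 0, 0],
    'Age':             [30.0, 40.0, -1.0, 4.0, 8.0, -1.0],
})

# Turn the -1 placeholder back into NaN so that medians ignore it
age = toy.Age.where(toy.Age >= 0)

# Median of the known ages within each (title, relationship) group
group_median = age.groupby([toy.Personal_Titles, toy.alone]).transform('median')

# Keep known ages, fill the missing ones with their group median
toy['Age_filled'] = age.fillna(group_median)
print(toy)
```

A group with no known age at all yields NaN here, which makes unfilled cases easy to spot afterwards.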

Finally, we check how the age distribution looks after filling the nulls.

print('Age correlation with survived:',data.corr()['Survived'].Age)

g = sns.distplot(data.Age, fit=norm, label='With nulls filled')

plt.legend(loc='upper right')

plt.show()

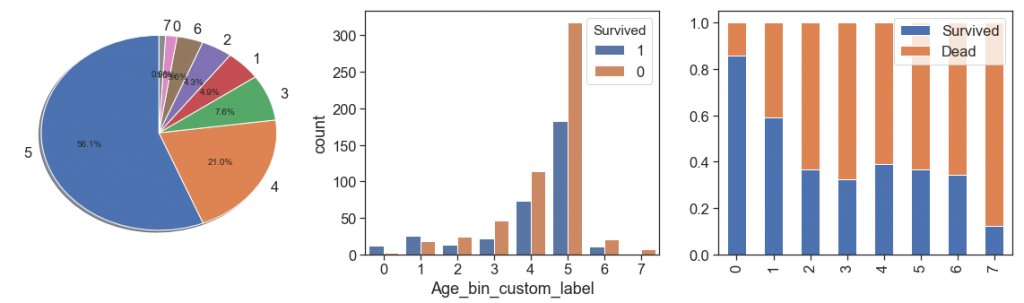

To better understand age, its proportions and its relation to the survival ratio, we bin it as follows:

def binningAge(df):

    # Binning Age based on custom ranges

    bin_ranges = [0, 1.7, 8, 15, 18, 25, 55, 65, 100]

    bin_names = [0, 1, 2, 3, 4, 5, 6, 7]

    df['Age_bin_custom_range'] = pd.cut(np.array(df.Age), bins=bin_ranges)

    df['Age_bin_custom_label'] = pd.cut(np.array(df.Age), bins=bin_ranges, labels=bin_names)

    return df

data = binningAge(data)

display(data[['Age', 'Age_bin_custom_range', 'Age_bin_custom_label']].sample(5))

display(pd.pivot_table(data[['Age_bin_custom_range', 'Survived']], aggfunc=np.count_nonzero,

index=['Survived'] , columns=['Age_bin_custom_range']))

charts('Age_bin_custom_label', data[(data.Survived>=0)])

One hot encode and drop provisional and useless features

One hot encode categorical and non ordinal data and drop useless features.

data['genre'] = data.Sex.apply(lambda x: 1 if x=='male' else 0)

data.drop(['Name', 'Cabin', 'Ticket', 'Sex', 'same_tckt', 'qtd_same_ticket', 'parent_alone', 'grandparents',

'grandparent_alone', 'Age_bin_custom_range'], axis=1, inplace=True) # , 'Age', 'Parch', 'SibSp',

data = pd.get_dummies(data, columns = ['Cabin_Letter', 'Personal_Titles', 'Embarked', 'distinction_in_tikect'])

data = pd.get_dummies(data, columns = ['surname']) # 'Age_bin_custom_label'

data.drop(['surname_Exclude'], axis=1, inplace=True)

SciPy’s pearsonr function computes both the correlation and the p-value for the correlation, roughly showing the probability of an uncorrelated system creating a correlation value of this magnitude.

import numpy as np

from scipy.stats import pearsonr

pearsonr(data.loc[:, 'Pclass'], data.Survived)
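To make the two returned values concrete, here is a small sketch on synthetic data (not the Titanic data): one variable that depends linearly on x and one that is unrelated to it. The arrays and the random seed are invented for illustration. Note that scipy.stats.pearsonr expects inputs without missing values, so on the real data the rows without a Survived value would have to be filtered out first.

```python
import numpy as np
from scipy.stats import pearsonr

rng = np.random.default_rng(0)
x = rng.normal(size=200)
y = 2 * x + rng.normal(size=200)   # linearly related to x, plus noise
z = rng.normal(size=200)           # generated independently of x

# pearsonr returns the correlation coefficient and its two-sided p-value
r_xy, p_xy = pearsonr(x, y)
r_xz, p_xz = pearsonr(x, z)

print(f"x vs y: r={r_xy:.3f}, p={p_xy:.3g}")
print(f"x vs z: r={r_xz:.3f}, p={p_xz:.3g}")
```

A strong correlation comes with a very small p-value, while for the unrelated pair the coefficient stays close to zero.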

Select Features

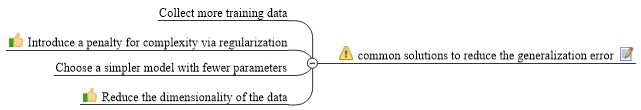

All of the features we find in the dataset might not be useful in building a machine learning model to make the necessary prediction. Using some of the features might even make the predictions worse.

Often in data science we have hundreds or even millions of features and we want a way to create a model that only includes the most important features. This has several benefits.

- It reduces the variance of the model, and therefore overfitting.

- It reduces the complexity of a model and makes it easier to interpret.

- It improves the accuracy of a model if the right subset is chosen.

- Finally, it reduces the computational cost (and time) of training a model.

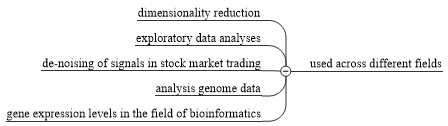

So, an alternative way to reduce the complexity of the model and avoid overfitting is dimensionality reduction via feature selection, which is especially useful for unregularized models. There are two main categories of dimensionality reduction techniques: feature selection and feature extraction. Using feature selection, we select a subset of the original features. In feature extraction, we derive information from the feature set to construct a new feature subspace.

There are various methodologies and techniques that you can use to subset your feature space and help your models perform better and more efficiently. So, let’s get started.

First check for any correlations between features

Correlation is a statistical term which in common usage refers to how close two features are to having a linear relationship with each other. Pearson’s correlation measures the linear correlation between two features; the resulting value lies in [-1, 1], with -1 meaning perfect negative correlation (as one feature increases, the other decreases), +1 meaning perfect positive correlation and 0 meaning no linear correlation between the two features.

Features with high correlation are more linearly dependent and hence have almost the same effect on the dependent variable. So, when two features have high correlation, we can drop one of the two features.

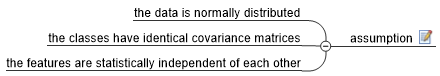

There are five assumptions that are made with respect to Pearson’s correlation:

- The features must be either interval or ratio measurements.

- The variables must be approximately normally distributed.

- There is a linear relationship between the two variables.

- Outliers are either kept to a minimum or are removed entirely.

- There is homoscedasticity of the data. Homoscedasticity basically means that the variances along the line of best fit remain similar as you move along the line.

One obvious drawback of Pearson correlation as a feature ranking mechanism is that it is only sensitive to a linear relationship. If the relation is non-linear, Pearson correlation can be close to zero even if there is a 1-1 correspondence between the two variables. For example, the correlation between x and x² is zero when x is centered on 0.
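The x versus x squared example is easy to verify numerically. The sketch below uses an invented, evenly spaced grid that is symmetric around zero; y is fully determined by x, yet the linear correlation vanishes.

```python
import numpy as np

# Values symmetric around 0, so x is centered on 0
x = np.linspace(-1, 1, 201)
y = x ** 2   # y depends on x deterministically, but not linearly

# Off-diagonal entry of the 2x2 correlation matrix
r = np.corrcoef(x, y)[0, 1]
print(f"Pearson r between x and x squared: {r:.2e}")
```

The result is zero up to floating-point error, which is why a plot is a useful complement to the coefficient.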

Furthermore, relying only on the correlation value on interpreting the relationship of two variables can be highly misleading, so it is always worth plotting the data as we did on the EDA phase.

The following guidelines help in interpreting Pearson’s correlation coefficient (r):

| Strength of Association | r Positive | r Negative |
|---|---|---|
| Small | 0.1 to 0.3 | -0.1 to -0.3 |
| Medium | 0.3 to 0.5 | -0.3 to -0.5 |
| Large | 0.5 to 1.0 | -0.5 to -1.0 |

The correlation matrix is identical to a covariance matrix computed from standardized data. The correlation matrix is a square matrix that contains the Pearson product-moment correlation coefficients (often abbreviated as Pearson’s r), which measure the linear dependence between pairs of features. Pearson’s correlation coefficient can simply be calculated as the covariance between two features x and y (numerator) divided by the product of their standard deviations (denominator):

$$r = \frac{\sigma_{xy}}{\sigma_x \, \sigma_y}$$

Here $\sigma_{xy}$ is the covariance between x and y, and $\sigma_x$ and $\sigma_y$ are their standard deviations.

The covariance between standardized features is in fact equal to their linear correlation coefficient.
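This equivalence can be checked numerically. The sketch below builds a small synthetic feature matrix (invented for illustration), standardizes each column, and compares the covariance matrix of the standardized data with the correlation matrix of the original data. It assumes the sample standard deviation (ddof=1) so that it matches the default of np.cov.

```python
import numpy as np

rng = np.random.default_rng(42)
X = rng.normal(size=(100, 3))
X[:, 1] += 0.5 * X[:, 0]   # make two columns correlated

# Standardize each column: zero mean, unit sample standard deviation
X_std = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

cov_std = np.cov(X_std, rowvar=False)   # covariance of standardized data
corr = np.corrcoef(X, rowvar=False)     # correlation of the original data

print(np.allclose(cov_std, corr))
```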

Let’s check which features have the highest correlations with Survived. I will now create a correlation matrix to quantify the linear relationships between the features, using the pandas corr method to compute it and seaborn’s heatmap function to plot it as a heat map.

corr = data.loc[:, 'Survived':].corr()

top_corr_cols = corr[abs(corr.Survived)>=0.06].Survived.sort_values(ascending=False).keys()

top_corr = corr.loc[top_corr_cols, top_corr_cols]

dropSelf = np.zeros_like(top_corr)

dropSelf[np.triu_indices_from(dropSelf)] = True

plt.figure(figsize=(15, 15))

sns.heatmap(top_corr, cmap=sns.diverging_palette(220, 10, as_cmap=True), annot=True, fmt=".2f", mask=dropSelf)

sns.set(font_scale=0.8)

plt.show()

Let’s see whether we have more surnames with a correlation with Survived between 0.05 and 0.06.

display(corr[(abs(corr.Survived)>=0.05) & (abs(corr.Survived)<0.06)].Survived.sort_values(ascending=False).keys())

del corr, dropSelf, top_corr

Drop the features with highest correlations to other features:

Collinearity is the state where two variables are highly correlated and contain similar information about the variance within a given dataset. As you can see above, it is easy to find the highest collinearities (Personal_Titles_Mrs, Personal_Titles_Mr and Fare).

You should always be concerned about the collinearity, regardless of the model/method being linear or not, or the main task being prediction or classification.

Assume that a number of linearly correlated features are present in the data set and that Random Forest is the method. The random selection of features at each node may pick only (or mostly) collinear features, which can result in a poor split, and this can happen repeatedly, thus negatively affecting the performance.

Now, the collinear features may be less informative of the outcome than the other (non-collinear) features, and as such they should be considered for elimination from the feature set anyway. However, assume that these features are ranked high in the ‘feature importance’ list produced by RF. They would then be kept in the data set, unnecessarily increasing the dimensionality. So, in practice, I’d always check the pairwise association of the features, including linear correlation, as one of the exploratory steps.
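One way to run that pairwise check is sketched below. The helper `top_correlated_pairs`, its 0.8 threshold and the toy frame are illustrative assumptions, not part of the original notebook; on the real data you would pass the feature columns of `data` instead.

```python
import numpy as np
import pandas as pd

def top_correlated_pairs(df, threshold=0.8):
    """Return feature pairs whose absolute Pearson correlation is >= threshold."""
    corr = df.corr().abs()
    # Keep only the strict upper triangle so that each pair appears once.
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    pairs = upper.stack()
    # Masked (NaN) entries never satisfy the comparison, so they are filtered out here.
    return pairs[pairs >= threshold].sort_values(ascending=False)

# Toy data (hypothetical): "b" is almost a copy of "a", "c" is independent.
rng = np.random.default_rng(1)
a = rng.normal(size=200)
toy = pd.DataFrame({
    "a": a,
    "b": a + rng.normal(scale=0.1, size=200),
    "c": rng.normal(size=200),
})

pairs = top_correlated_pairs(toy)
print(pairs)  # only the (a, b) pair should pass the threshold
```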

Identify and treat multicollinearity:

Multicollinearity is more troublesome to detect because it emerges when three or more variables, which are highly correlated, are included within a model, leading to unreliable and unstable estimates of regression coefficients. To make matters worse, multicollinearity can emerge even when isolated pairs of variables are not collinear.

To identify it, we start with the coefficient of determination, r², which is the square of the Pearson correlation coefficient r. With respect to correlation, the coefficient of determination is the proportion of the variance that is shared by both variables. It gives a measure of the amount of variation that can be explained by the model (here, the correlation is the model). It is sometimes expressed as a percentage (e.g., 36% instead of 0.36) when we discuss the proportion of variance explained by the correlation. However, you should not write r² = 36%, or any other percentage. You should write it as a proportion (e.g., r² = 0.36).

The Variance Inflation Factor (VIF), in turn, is a measure of collinearity among predictor variables within a multiple regression. It can be calculated for each predictor by running a linear regression of that predictor on all the other predictors and obtaining the R² from that regression; the VIF is then 1/(1-R²). Equivalently, it is the ratio of the variance of a coefficient in the full model to the variance that coefficient would have if its predictor were fit alone. Thus, a VIF of 1.8 tells us that the variance (the square of the standard error) of a particular coefficient is 80% larger than it would be if that predictor was completely uncorrelated with all the other predictors. The VIF has a lower bound of 1 but no upper bound. Authorities differ on how high the VIF has to be to constitute a problem: some use 2.5 (R² equal to 0.6), others 5 (R² equal to 0.8) or 10 (R² equal to 0.9).
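To make the definition concrete, here is a minimal sketch that computes the VIF by hand with NumPy, following the regress-then-1/(1-R²) recipe. The function `vif` and the synthetic predictors are assumptions made for illustration. The toy data also shows that multicollinearity can appear while every pairwise correlation stays moderate: the fifth column is close to the sum of four independent ones.

```python
import numpy as np

def vif(X, j):
    """VIF of column j: regress it on the other columns (plus an intercept)."""
    y = X[:, j]
    others = np.delete(X, j, axis=1)
    A = np.column_stack([np.ones(len(y)), others])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    r2 = 1.0 - resid.var() / y.var()
    return 1.0 / (1.0 - r2)

rng = np.random.default_rng(42)
n = 1000
base = rng.normal(size=(n, 4))                             # four independent predictors
total = base.sum(axis=1) + rng.normal(scale=0.3, size=n)   # nearly their sum
X = np.column_stack([base, total])

# Largest absolute pairwise correlation among the five columns.
pairwise = np.corrcoef(X, rowvar=False)
max_pairwise = np.abs(pairwise[np.triu_indices(X.shape[1], k=1)]).max()

vifs = [vif(X, j) for j in range(X.shape[1])]
print("largest pairwise |r|:", max_pairwise)
print("VIFs:", vifs)
```

No single pair looks alarming in the correlation matrix, yet every column ends up with a high VIF, which is why the pairwise heat map alone is not enough.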

But there are several situations in which multicollinearity can be safely ignored:

- Interaction terms and higher-order terms (e.g., squared and cubed predictors) are correlated with main effect terms because they include the main effect terms. This matters because we do sometimes use polynomials to solve problems. But keep calm: in these cases, standardizing (in particular, centering) the predictors can remove most of the multicollinearity.

- Indicator variables (dummy or one-hot encoded) that represent a categorical variable with three or more categories. If the proportion of cases in the reference category is small, the indicators will necessarily have high VIFs, even if the categorical variable is not associated with other variables in the regression model. You still need to check whether any dummy is collinear, or multicollinear, with features outside its own group of dummies.

- Control features, as long as the features of interest do not have high VIFs. Here’s the thing about multicollinearity: it’s only a problem for the features that are collinear. It increases the standard errors of their coefficients, and it may make those coefficients unstable in several ways. But so long as the collinear features are only used as control features, and they are not collinear with your features of interest, there’s no problem. The coefficients of the features of interest are not affected, and the performance of the control features as controls is not impaired.
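The first case in the list above can be illustrated with a small sketch. The variable `x` is synthetic (an assumption for illustration); the point is only to compare the correlation between a predictor and its square before and after centering.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(1, 10, size=1000)   # a strictly positive synthetic predictor

# Raw predictor vs. its square: strongly correlated.
raw_corr = np.corrcoef(x, x ** 2)[0, 1]

# Center first, then square: the correlation largely disappears
# because the centered values are roughly symmetric around zero.
xc = x - x.mean()
centered_corr = np.corrcoef(xc, xc ** 2)[0, 1]

print("raw:", raw_corr, "centered:", centered_corr)
```

This is the same kind of centering that `StandardScaler(with_std=False)` applies. Note that the effect relies on the predictor being roughly symmetric; for a strongly skewed predictor the reduction is smaller.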

So, generally, we could run the same model twice, once with severe multicollinearity and once with moderate multicollinearity. This provides a great head-to-head comparison and it reveals the classic effects of multicollinearity. However, when standardizing your predictors doesn’t work, you can try other solutions such as:

- removing highly correlated predictors

- linearly combining predictors, such as adding them together

- running entirely different analyses, such as partial least squares regression or principal components analysis
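As a rough illustration of the last remedy, the sketch below runs a principal components analysis with plain NumPy (via the SVD) on synthetic data. The array `X` is made up for illustration; the point is that the resulting component scores are uncorrelated with each other even though the original columns are not.

```python
import numpy as np

rng = np.random.default_rng(3)
a = rng.normal(size=300)
# Columns 0 and 1 are nearly collinear, column 2 is independent (synthetic data).
X = np.column_stack([a, a + rng.normal(scale=0.2, size=300), rng.normal(size=300)])

# PCA: center the data, take the SVD, project onto the right singular vectors.
Xc = X - X.mean(axis=0)
U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
scores = Xc @ Vt.T                      # principal component scores

original_corr = np.corrcoef(X, rowvar=False)[0, 1]
score_corr = np.corrcoef(scores, rowvar=False)
off_diag = score_corr[~np.eye(3, dtype=bool)]

print("corr between original columns 0 and 1:", original_corr)
print("largest |corr| between components:", np.abs(off_diag).max())
```

The trade-off is interpretability: each component mixes several original features, so the coefficients no longer refer to a single variable.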

When considering a solution, keep in mind that all remedies have potential drawbacks. If you can live with less precise coefficient estimates, or a model that has a high R-squared but few significant predictors, doing nothing can be the correct decision because it won’t impact the fit.

Given the potential for correlation among the predictors, we’ll display the variance inflation factors (VIF), which indicate the extent to which multicollinearity is present in a regression analysis. Variables with unacceptably high VIFs need to be removed from the model. Deleting one variable at a time and then checking the VIFs of the model again is the best way to do this.
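The one-at-a-time procedure can be sketched as a loop. This is an automated variant for illustration only: the helpers `vif_table` and `drop_high_vif` and the threshold of 10 are assumptions, and in this notebook the feature to drop is chosen by judgment rather than by always taking the largest VIF. The sketch reads the VIFs from the diagonal of the inverse correlation matrix, which assumes no exact linear dependence among the columns (an exact dependence makes that matrix singular).

```python
import numpy as np
import pandas as pd

def vif_table(df):
    """VIF per column, read from the diagonal of the inverse correlation matrix."""
    corr = np.corrcoef(df.to_numpy(dtype=float), rowvar=False)
    return pd.Series(np.diag(np.linalg.inv(corr)), index=df.columns)

def drop_high_vif(df, threshold=10.0):
    """Drop the column with the largest VIF, one at a time, until all are below threshold."""
    df = df.copy()
    while df.shape[1] > 1:
        vifs = vif_table(df)
        worst = vifs.idxmax()
        if vifs[worst] < threshold:
            break
        df = df.drop(columns=worst)   # remove one feature, then re-check
    return df

# Toy data (hypothetical): "a" and "b" are nearly collinear, "c" is independent.
rng = np.random.default_rng(7)
a = rng.normal(size=300)
toy = pd.DataFrame({
    "a": a,
    "b": a + rng.normal(scale=0.1, size=300),
    "c": rng.normal(size=300),
})

kept = drop_high_vif(toy)
print(vif_table(toy))
print("kept:", list(kept.columns))
```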

So, I start the analysis by removing the 3 features with the highest collinearities, along with the surnames other than my control surname_Alone whose correlation with Survived is less than 0.05, and then run VIF.

# Step 1: Remove the highest correlations and run a multiple regression

cols = [ 'family',

'non_relatives',

'surname_Alone',

'surname_Baclini',

'surname_Carter',

'surname_Richards',

'surname_Harper', 'surname_Beckwith', 'surname_Goldenberg',

'surname_Moor', 'surname_Chambers', 'surname_Hamalainen',

'surname_Dick', 'surname_Taylor', 'surname_Doling', 'surname_Gordon',

'surname_Beane', 'surname_Hippach', 'surname_Bishop',

'surname_Mellinger', 'surname_Yarred',

'Pclass',

'Age',

'SibSp',

'Parch',

#'Fare',

'qtd_same_ticket_bin',

'passenger_fare',

#'SibSp_bin',

#'Parch_bin',

'distinction_in_name',

'Cabin_Number',

'sons',

'parents',

'relatives',

'companions',

'alone',

'Without_Age',

'Age_bin_custom_label',

'genre',

'Cabin_Letter_A',

'Cabin_Letter_B',

'Cabin_Letter_C',

'Cabin_Letter_D',

'Cabin_Letter_E',

'Cabin_Letter_F',

'Cabin_Letter_G',

'Personal_Titles_Kid',

'Personal_Titles_Miss',

#'Personal_Titles_Mr',

#'Personal_Titles_Mrs',

'Personal_Titles_Royalty',

'Personal_Titles_Technical',

'Embarked_C',

'Embarked_Q',

'Embarked_S',

'distinction_in_tikect_High',

'distinction_in_tikect_Low',

'distinction_in_tikect_Others',

'distinction_in_tikect_PC'

]

y_train = data.Survived[data.Survived>=0]

scale = StandardScaler(with_std=False)

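# Center the features (no scaling) and reuse the training index so the concat below aligns row by row
df = pd.DataFrame(scale.fit_transform(data.loc[data.Survived>=0, cols]), columns=cols, index=y_train.index)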

features = "+".join(cols)

df2 = pd.concat([y_train, df], axis=1)

# get y and X dataframes based on this regression:

y, X = dmatrices('Survived ~' + features, data = df2, return_type='dataframe')

#Step 2: Calculate VIF Factors

# For each X, calculate VIF and save in dataframe

vif = pd.DataFrame()

vif["VIF Factor"] = [variance_inflation_factor(X.values, i) for i in range(X.shape[1])]

vif["features"] = X.columns

#Step 3: Inspect VIF Factors

display(vif.sort_values('VIF Factor'))

From the results, I conclude that we can safely keep the Embarked dummies, but we need to work on the remaining features whose VIF is reported as inf. You can see that surname_Alone has a VIF of 2.2. We’re going to treat it as our baseline and exclude it from our fit. This is done to prevent multicollinearity, or the dummy variable trap caused by including a dummy variable for every single category. Let’s try removing the dummy alone, which is very similar, and check whether that resolves the other dummies in its category:

# Step 1: Remove one feature with a VIF of inf from the same category and run a multiple regression

cols.remove('alone')

y_train = data.Survived[data.Survived>=0]

scale = StandardScaler(with_std=False)

# Reuse the training index so the concat below aligns row by row
df = pd.DataFrame(scale.fit_transform(data.loc[data.Survived>=0, cols]), columns=cols, index=y_train.index)

features = "+".join(cols)

df2 = pd.concat([y_train, df], axis=1)

# get y and X dataframes based on this regression:

y, X = dmatrices('Survived ~' + features, data = df2, return_type='dataframe')

#Step 2: Calculate VIF Factors

# For each X, calculate VIF and save in dataframe

vif = pd.DataFrame()

vif["VIF Factor"] = [variance_inflation_factor(X.values, i) for i in range(X.shape[1])]

vif["features"] = X.columns

#Step 3: Inspect VIF Factors

display(vif.sort_values('VIF Factor'))
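The dummy variable trap mentioned above can be shown on a tiny example. The series below is made up for illustration (it only borrows the Embarked category labels). With an intercept in the model, a full set of dummies always sums to the intercept column, so the design matrix loses rank; dropping one category restores full rank.

```python
import numpy as np
import pandas as pd

# Hypothetical values, not taken from the dataset.
embarked = pd.Series(["C", "Q", "S", "S", "C", "S", "Q", "S"], name="Embarked")

full = pd.get_dummies(embarked, prefix="Embarked", dtype=float)
reduced = pd.get_dummies(embarked, prefix="Embarked", drop_first=True, dtype=float)

def design_rank(dummies):
    """Rank and column count of the design matrix [intercept | dummies]."""
    X = np.column_stack([np.ones(len(dummies)), dummies.to_numpy()])
    return np.linalg.matrix_rank(X), X.shape[1]

full_rank, full_cols = design_rank(full)
reduced_rank, reduced_cols = design_rank(reduced)

print("all dummies:   rank", full_rank, "of", full_cols, "columns")
print("one dropped:   rank", reduced_rank, "of", reduced_cols, "columns")
```

A rank lower than the number of columns means one column is an exact linear combination of the others, which is what produces the inf VIFs.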

To resolve Cabin_Letter, we can try removing only the lowest-frequency category, ‘A’, and see whether we can accept the VIFs of the other cabins:

# Step 1: Remove one feature with a VIF of inf from the same category and run a multiple regression

cols.remove('Cabin_Letter_A')

y_train = data.Survived[data.Survived>=0]

scale = StandardScaler(with_std=False)

# Reuse the training index so the concat below aligns row by row
df = pd.DataFrame(scale.fit_transform(data.loc[data.Survived>=0, cols]), columns=cols, index=y_train.index)

features = "+".join(cols)

df2 = pd.concat([y_train, df], axis=1)

# get y and X dataframes based on this regression:

y, X = dmatrices('Survived ~' + features, data = df2, return_type='dataframe')

#Step 2: Calculate VIF Factors

# For each X, calculate VIF and save in dataframe

vif = pd.DataFrame()

vif["VIF Factor"] = [variance_inflation_factor(X.values, i) for i in range(X.shape[1])]

vif["features"] = X.columns

#Step 3: Inspect VIF Factors

display(vif.sort_values('VIF Factor'))

Now our focus is on the distinction in ticket dummies. Since “High” has the fewest observations, let’s try dropping it. The bins of Parch and SibSp stay out as well (they are commented out in cols).

cols.remove('distinction_in_tikect_High')

y_train = data.Survived[data.Survived>=0]

scale = StandardScaler(with_std=False)

# Reuse the training index so the concat below aligns row by row
df = pd.DataFrame(scale.fit_transform(data.loc[data.Survived>=0, cols]), columns=cols, index=y_train.index)

features = "+".join(cols)

df2 = pd.concat([y_train, df], axis=1)

# get y and X dataframes based on this regression:

y, X = dmatrices('Survived ~' + features, data = df2, return_type='dataframe')

#Step 2: Calculate VIF Factors

# For each X, calculate VIF and save in dataframe

vif = pd.DataFrame()

vif["VIF Factor"] = [variance_inflation_factor(X.values, i) for i in range(X.shape[1])]

vif["features"] = X.columns

#Step 3: Inspect VIF Factors

display(vif.sort_values('VIF Factor'))

As we can see, we now have to remove one of family, Parch and SibSp. Note that non_relatives and qtd_same_ticket_bin already have relatively acceptable VIFs. The first is directly calculated from family and the second is very close to it, as we have seen. So let’s discard family.

cols.remove('family')

y_train = data.Survived[data.Survived>=0]

scale = StandardScaler(with_std=False)

# Reuse the training index so the concat below aligns row by row
df = pd.DataFrame(scale.fit_transform(data.loc[data.Survived>=0, cols]), columns=cols, index=y_train.index)

features = "+".join(cols)

df2 = pd.concat([y_train, df], axis=1)

# get y and X dataframes based on this regression:

y, X = dmatrices('Survived ~' + features, data = df2, return_type='dataframe')

#Step 2: Calculate VIF Factors

# For each X, calculate VIF and save in dataframe

vif = pd.DataFrame()

vif["VIF Factor"] = [variance_inflation_factor(X.values, i) for i in range(X.shape[1])]

vif["features"] = X.columns

#Step 3: Inspect VIF Factors

display(vif.sort_values('VIF Factor'))

Yes, we can accept these VIFs, so we can proceed to the next step.

Feature Selection by Filter Methods

Filter methods use statistical measures to evaluate features and are generally used as a preprocessing step. They are also known as univariate feature selection: they examine each feature individually to determine the strength of its relationship with the dependent variable. These methods are simple to run and understand, and they are particularly good for gaining a better understanding of the data, but not necessarily for optimizing the feature set for better generalization.